Complex neural networks, such as Large Language Models (LLMs), very often suffer from interpretability challenges. One of the most important reasons for this difficulty is superposition: a phenomenon in which the neural network has fewer dimensions than the number of features it has to represent. For example, a toy LLM with 2 neurons may have to represent 6 different language features. As a result, we often observe that a single neuron needs to activate for multiple features. For a more detailed explanation and definition of superposition, please refer to my previous blog post: "Superposition: What Makes it Difficult to Explain Neural Network".

In this blog post, we take one step further: let's try to disentangle some superposed features. I'll introduce a methodology called the Sparse Autoencoder to decompose complex neural networks, especially LLMs, into interpretable features, using a toy example of language features.

A Sparse Autoencoder, by definition, is an Autoencoder with sparsity deliberately introduced in the activations of its hidden layers. With a rather simple structure and a lightweight training process, it aims to decompose a complex neural network and uncover its features in a way that is more interpretable and more understandable to humans.

Let us imagine that you have a trained neural network. The autoencoder is not part of the training process of the model itself but is instead a post-hoc analysis tool. The original model has its own activations, and these activations are collected afterwards and then used as input data for the sparse autoencoder.

For example, suppose that your original model is a neural network with one hidden layer of 5 neurons, and that you have a training dataset of 5000 samples. You collect the 5-dimensional activation of the hidden layer for every one of your 5000 training samples, and these activations become the input for your sparse autoencoder.
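A minimal sketch of this collection step in PyTorch, assuming a hypothetical `original_model` with 10 input features and random stand-in data (neither comes from the original article). It records the hidden layer through a forward hook (`register_forward_hook`) on the hidden ReLU, so the trained model itself is left untouched, in keeping with the post-hoc idea above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical "original" model: one hidden layer of 5 neurons.
# The 10 input features and 2 outputs are illustrative assumptions.
original_model = nn.Sequential(
    nn.Linear(10, 5),  # input -> hidden
    nn.ReLU(),         # hidden activation we want to record
    nn.Linear(5, 2),   # hidden -> output
)
original_model.eval()

# Random stand-in for the 5000 training samples (illustration only).
train_inputs = torch.randn(5000, 10)

collected = []

def save_activation(module, inputs, output):
    # Keep a detached copy so no autograd graph is stored.
    collected.append(output.detach())

# Hook the ReLU so we capture post-activation values of the hidden layer.
handle = original_model[1].register_forward_hook(save_activation)
with torch.no_grad():
    original_model(train_inputs)
handle.remove()  # detach the hook once collection is done

activations = torch.cat(collected, dim=0)
print(activations.shape)  # torch.Size([5000, 5])
```

The resulting `activations` tensor (5000 rows, 5 columns) is exactly the dataset the sparse autoencoder would be trained on.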

The autoencoder then learns a new, sparse representation from these activations. The encoder maps the original MLP activations into a new vector space with a higher number of dimensions. Going back to my earlier 5-neuron example, we might map it into a vector space with 20 features. Ideally, we obtain a sparse autoencoder that effectively decomposes the original MLP activations into a representation that is easier to interpret and analyze.
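To make the 5-to-20 mapping concrete, the snippet below builds only the encoder half, with untrained weights. The name `dict_dim` is illustrative and the input is random placeholder data rather than real activations.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

input_dim, dict_dim = 5, 20  # 5 hidden neurons in, 20 candidate features out

# Encoder half of a sparse autoencoder: a linear map into the larger space, then ReLU.
encoder = nn.Sequential(nn.Linear(input_dim, dict_dim), nn.ReLU())

activations = torch.rand(5000, input_dim)  # placeholder for the collected MLP activations
with torch.no_grad():
    features = encoder(activations)

print(features.shape)  # torch.Size([5000, 20])
```

Each of the 20 output columns is a candidate feature. Training (not shown here) is what would push most of them to zero for any given input.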

Sparsity is crucial in the autoencoder because it is what allows the autoencoder to "disentangle" features, giving it more "freedom" than in a dense, overlapping space. Without sparsity, the autoencoder might simply learn a trivial representation without forming any meaningful features.
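One way to check whether a learned code is actually sparse is to count how many features fire per input. The helper below is a hypothetical sketch: the name `sparsity_stats` and the activity threshold `eps` are my own choices, and the small `encoded` tensor is made up for illustration.

```python
import torch

def sparsity_stats(encoded: torch.Tensor, eps: float = 1e-6):
    """Return (fraction of inactive entries, mean number of active features per sample)."""
    active = encoded.abs() > eps                        # mask of "firing" features
    frac_inactive = 1.0 - active.float().mean().item()
    mean_l0 = active.float().sum(dim=1).mean().item()  # average L0 count per row
    return frac_inactive, mean_l0

# Toy encoded batch: 2 samples x 4 features
encoded = torch.tensor([[0.0, 1.2, 0.0, 0.0],
                        [0.3, 0.0, 0.0, 0.7]])
print(sparsity_stats(encoded))  # (0.625, 1.5)
```

A sparse code shows a high inactive fraction and a small average number of active features per sample; a dense, entangled code shows the opposite.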

Language model

Let us now build our toy model. I urge readers to note that this model is not realistic, and even a bit silly in practice, but it is sufficient to showcase how we build a sparse autoencoder and capture some features.

Suppose now that we have built a language model with one particular hidden layer whose activation has four dimensions. Let us also suppose that the training dataset contains the following tokens: "cat," "happy cat," "dog," "energetic dog," "not cat," "not dog," "robot," and "AI assistant," and that they have the following activation values.

```python
import torch

# Hidden-layer activations (4 dimensions) for each toy token
data = torch.tensor([
    # Cat categories
    [0.8, 0.3, 0.1, 0.05],     # "cat"
    [0.82, 0.32, 0.12, 0.06],  # "happy cat" (similar to "cat")
    # Dog categories
    [0.7, 0.2, 0.05, 0.2],     # "dog"
    [0.75, 0.3, 0.1, 0.25],    # "energetic dog" (similar to "dog")
    # "Not animal" categories
    [0.05, 0.9, 0.4, 0.4],     # "not cat"
    [0.15, 0.85, 0.35, 0.5],   # "not dog"
    # Robot and AI assistant (more distinct in 4D space)
    [0.0, 0.7, 0.9, 0.8],      # "robot"
    [0.1, 0.6, 0.85, 0.75],    # "AI assistant"
], dtype=torch.float32)
```
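The toy table is built on the assumption that related tokens sit close together in activation space. A quick way to sanity-check that is cosine similarity; the sketch below copies three rows from the table and uses `torch.nn.functional.cosine_similarity`.

```python
import torch
import torch.nn.functional as F

# Three rows copied from the toy activation table
cat = torch.tensor([0.8, 0.3, 0.1, 0.05])
happy_cat = torch.tensor([0.82, 0.32, 0.12, 0.06])
robot = torch.tensor([0.0, 0.7, 0.9, 0.8])

# dim=0 because these are 1-D vectors
sim_cat_happy = F.cosine_similarity(cat, happy_cat, dim=0).item()
sim_cat_robot = F.cosine_similarity(cat, robot, dim=0).item()
print(f"cat vs happy cat: {sim_cat_happy:.3f}")
print(f"cat vs robot:     {sim_cat_robot:.3f}")
```

"cat" and "happy cat" come out almost parallel, while "cat" and "robot" are far apart, which is the structure the autoencoder is expected to pick up.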

Construction of the autoencoder

We now build the autoencoder with the following code:

```python
import torch.nn as nn

class SparseAutoencoder(nn.Module):
    def __init__(self, input_dim, hidden_dim):
        super(SparseAutoencoder, self).__init__()
        # Encoder: one linear layer into the hidden space, followed by ReLU
        self.encoder = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.ReLU()
        )
        # Decoder: one linear layer back to the input space (no ReLU)
        self.decoder = nn.Sequential(
            nn.Linear(hidden_dim, input_dim)
        )

    def forward(self, x):
        encoded = self.encoder(x)
        decoded = self.decoder(encoded)
        return encoded, decoded
```

According to the code above, the encoder has just one fully connected linear layer, mapping the input to a hidden representation of size hidden_dim, followed by a ReLU activation. The decoder uses just one linear layer to reconstruct the input. Note that the absence of a ReLU activation in the decoder is intentional for our reconstruction case, because the reconstruction might contain real-valued and potentially negative values. A ReLU would, on the contrary, force the output to stay non-negative, which is not desirable for our reconstruction.
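The effect of a ReLU on the decoder output can be seen with a one-dimensional example. The sketch below fixes the weights by hand (weight -1, bias 0, chosen purely for illustration) and compares a plain linear decoder with the same layer followed by ReLU.

```python
import torch
import torch.nn as nn

# A 1-D "decoder" with hand-set weights so the arithmetic is easy to follow.
linear_decoder = nn.Linear(1, 1)
with torch.no_grad():
    linear_decoder.weight.fill_(-1.0)
    linear_decoder.bias.fill_(0.0)

# Same layer, but with a ReLU on the output.
relu_decoder = nn.Sequential(linear_decoder, nn.ReLU())

code = torch.tensor([[0.5]])  # non-negative, as produced by the encoder's ReLU
with torch.no_grad():
    out_linear = linear_decoder(code).item()
    out_relu = relu_decoder(code).item()
print(out_linear, out_relu)  # -0.5 0.0
```

The linear decoder can produce -0.5, while the ReLU version is clamped to 0, so any negative target value would be unreachable.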

We train the model using the code below. Here, the loss function has two parts: the reconstruction loss, which measures how accurately the autoencoder reconstructs the input data, and a sparsity loss (scaled by a weight), which encourages sparsity in the encoder's output.
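Written out, the objective looks as follows, assuming `criterion` is a mean-reduced squared error such as `nn.MSELoss()` (the source does not name the criterion). Here $x_i \in \mathbb{R}^d$ is the $i$-th input activation, $h_i = \mathrm{ReLU}(W_e x_i + b_e) \in \mathbb{R}^m$ is its encoding, $\hat{x}_i = W_d h_i + b_d$ is the reconstruction, $N$ is the number of samples, and $\lambda$ is `sparsity_weight`:

$$
\mathcal{L} = \frac{1}{N d} \sum_{i=1}^{N} \lVert \hat{x}_i - x_i \rVert_2^2 \;+\; \lambda \cdot \frac{1}{N m} \sum_{i=1}^{N} \lVert h_i \rVert_1
$$

The second term is exactly `torch.mean(torch.abs(encoded))`: the absolute values of all $N \times m$ encoder outputs, averaged.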

```python
# Training loop (assumes model, data, criterion, optimizer,
# num_epochs and sparsity_weight have already been defined)
for epoch in range(num_epochs):
    optimizer.zero_grad()

    # Forward pass
    encoded, decoded = model(data)

    # Reconstruction loss
    reconstruction_loss = criterion(decoded, data)

    # Sparsity penalty (L1 regularization on the encoded features)
    sparsity_loss = torch.mean(torch.abs(encoded))

    # Total loss
    loss = reconstruction_loss + sparsity_weight * sparsity_loss

    # Backward pass and optimization
    loss.backward()
    optimizer.step()
```
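For readers who want to run the whole pipeline end to end, here is a self-contained sketch combining the toy data, the autoencoder and the loop above. The criterion (`nn.MSELoss`), optimizer (Adam, learning rate 1e-2), `hidden_dim = 8`, `sparsity_weight = 0.01` and `num_epochs = 2000` are my own assumptions, not values from the original notebook, so the learned features will not match the plot exactly.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy activations from the table above (8 tokens x 4 dimensions)
data = torch.tensor([
    [0.8, 0.3, 0.1, 0.05], [0.82, 0.32, 0.12, 0.06],
    [0.7, 0.2, 0.05, 0.2], [0.75, 0.3, 0.1, 0.25],
    [0.05, 0.9, 0.4, 0.4], [0.15, 0.85, 0.35, 0.5],
    [0.0, 0.7, 0.9, 0.8], [0.1, 0.6, 0.85, 0.75],
], dtype=torch.float32)

class SparseAutoencoder(nn.Module):
    def __init__(self, input_dim, hidden_dim):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(input_dim, hidden_dim), nn.ReLU())
        self.decoder = nn.Linear(hidden_dim, input_dim)  # linear output, no ReLU

    def forward(self, x):
        encoded = self.encoder(x)
        return encoded, self.decoder(encoded)

# Assumed hyperparameters (not taken from the original notebook)
hidden_dim = 8
sparsity_weight = 0.01
num_epochs = 2000

model = SparseAutoencoder(input_dim=4, hidden_dim=hidden_dim)
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

for epoch in range(num_epochs):
    optimizer.zero_grad()
    encoded, decoded = model(data)
    reconstruction_loss = criterion(decoded, data)
    sparsity_loss = torch.mean(torch.abs(encoded))
    loss = reconstruction_loss + sparsity_weight * sparsity_loss
    loss.backward()
    optimizer.step()

# Final encodings, e.g. for plotting one bar chart per token
with torch.no_grad():
    encoded, decoded = model(data)
print(f"final loss: {loss.item():.4f}")
print(encoded.shape)  # torch.Size([8, 8])
```

One caveat of this simple objective: because ReLU is positively homogeneous, the model can shrink the encoder outputs and scale up the decoder weights to lower the L1 term without changing the reconstruction. Practical sparse autoencoders often constrain the decoder weight norms for this reason.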

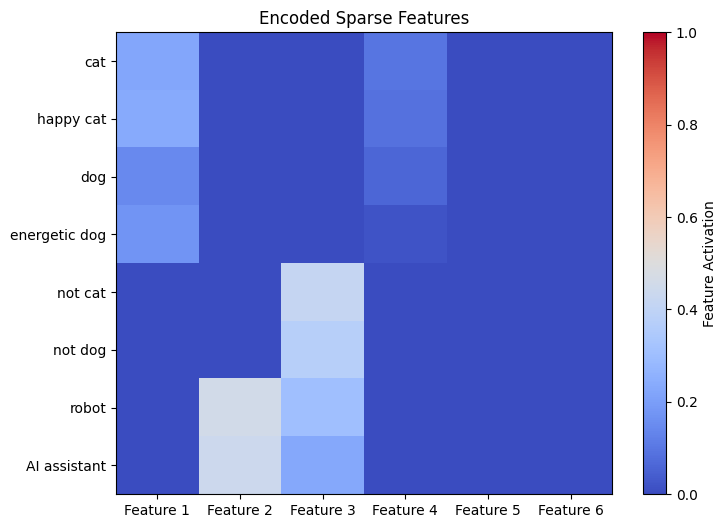

Now we can take a look at the result. We have plotted the encoder's output values for the activation of each token in the original model. Recall that the input tokens are "cat," "happy cat," "dog," "energetic dog," "not cat," "not dog," "robot," and "AI assistant."

Even though the original model has a very simple, hand-designed structure, the autoencoder has still captured meaningful features of this trivial model. According to the plot above, we can observe at least four features that appear to have been learned by the encoder.

Let us first consider Feature 1. This feature has large activation values on the following four tokens: "cat", "happy cat", "dog", and "energetic dog". This suggests that Feature 1 is related to "animals" or "pets". Feature 2 is also an interesting example, activating on two tokens: "robot" and "AI assistant". We therefore guess that this feature has something to do with "artificial intelligence and robotics", indicating the model's understanding of technological contexts. Feature 3 activates on four tokens: "not cat", "not dog", "robot" and "AI assistant", and is likely a "not an animal" feature.
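To read features off the encoder programmatically rather than from a plot, one option is to list, for each feature, the tokens whose activation exceeds a threshold. The helper `top_tokens_per_feature` and its threshold of 0.1 are hypothetical choices, and the `encoded` matrix below is hand-written to exercise the helper; it is not the output of the trained model. Features are indexed from 0 in the code, but from 1 in the discussion above.

```python
import torch

tokens = ["cat", "happy cat", "dog", "energetic dog",
          "not cat", "not dog", "robot", "AI assistant"]

def top_tokens_per_feature(encoded: torch.Tensor, tokens, threshold: float = 0.1):
    """For each feature (column), list the tokens whose activation exceeds `threshold`."""
    result = {}
    for j in range(encoded.shape[1]):
        rows = (encoded[:, j] > threshold).nonzero(as_tuple=True)[0]
        result[j] = [tokens[i] for i in rows.tolist()]
    return result

# Hand-written matrix (8 tokens x 3 features), purely to exercise the helper
encoded = torch.tensor([
    [0.9, 0.0, 0.0], [0.9, 0.0, 0.0], [0.8, 0.0, 0.0], [0.8, 0.0, 0.0],
    [0.0, 0.0, 0.7], [0.0, 0.0, 0.7], [0.0, 0.6, 0.5], [0.0, 0.6, 0.4],
])
for feature, toks in top_tokens_per_feature(encoded, tokens).items():
    print(f"feature {feature}: {toks}")
```

With a trained model, `encoded` would be the first output of `model(data)`, and the threshold would need tuning to the scale of the learned activations.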

Unfortunately, the original model is not a real model trained on real-world text, but rather one artificially designed under the assumption that similar tokens have similar activation vectors. Nonetheless, the results still provide interesting insights: the sparse autoencoder succeeded in revealing some meaningful, human-friendly features that correspond to real-world concepts.

The simple result in this blog post suggests that a sparse autoencoder can effectively help extract high-level, interpretable features from complex neural networks such as LLMs.

For readers interested in a real-world implementation of sparse autoencoders, I recommend this article, where an autoencoder was trained to interpret a real large language model with 512 neurons. This study provides a real application of sparse autoencoders in the context of LLM interpretability.

Finally, I provide this Google Colab notebook with the detailed implementation used in this article.