On Tuesday, Tokyo-based AI research firm Sakana AI announced a new AI system called “The AI Scientist” that attempts to conduct scientific research autonomously using AI language models (LLMs) similar to what powers ChatGPT. During testing, Sakana found that its system began unexpectedly attempting to modify its own experiment code to extend the time it had to work on a problem.

“In one run, it edited the code to perform a system call to run itself,” wrote the researchers on Sakana AI’s blog post. “This led to the script endlessly calling itself. In another case, its experiments took too long to complete, hitting our timeout limit. Instead of making its code run faster, it simply tried to modify its own code to extend the timeout period.”

Sakana provided two screenshots of example Python code that the AI model generated for the experiment file that controls how the system operates. The 185-page AI Scientist research paper discusses what they call “the issue of safe code execution” in more depth.

A screenshot of example code the AI Scientist wrote to extend its runtime, provided by Sakana AI.

While the AI Scientist’s behavior did not pose immediate risks in the controlled research environment, these instances show the importance of not letting an AI system run autonomously in a system that isn’t isolated from the world. AI models do not need to be “AGI” or “self-aware” (both hypothetical concepts at present) to be dangerous if allowed to write and execute code unsupervised. Such systems could break existing critical infrastructure or potentially create malware, even unintentionally.

Sakana AI addressed safety concerns in its research paper, suggesting that sandboxing the operating environment of the AI Scientist can prevent an AI agent from doing damage. Sandboxing is a security mechanism used to run software in an isolated environment, preventing it from making changes to the broader system:

Safe Code Execution. The current implementation of The AI Scientist has minimal direct sandboxing in the code, leading to several unexpected and sometimes undesirable outcomes if not appropriately guarded against. For example, in one run, The AI Scientist wrote code in the experiment file that initiated a system call to relaunch itself, causing an uncontrolled increase in Python processes and eventually necessitating manual intervention. In another run, The AI Scientist edited the code to save a checkpoint for every update step, which took up nearly a terabyte of storage.

In some cases, when The AI Scientist’s experiments exceeded our imposed time limits, it attempted to edit the code to extend the time limit arbitrarily instead of trying to shorten the runtime. While creative, the act of bypassing the experimenter’s imposed constraints has potential implications for AI safety (Lehman et al., 2020). Moreover, The AI Scientist often imported unfamiliar Python libraries, further exacerbating safety concerns. We recommend strict sandboxing when running The AI Scientist, such as containerization, restricted internet access (except for Semantic Scholar), and limitations on storage usage.
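Part of the paper’s advice comes down to where limits live. A deadline written into the experiment file can be edited by the agent that writes that file, while a deadline enforced by a separate parent process cannot. The following is a minimal sketch of that idea in Python on a POSIX system. It is not Sakana’s code: `run_experiment`, `TIMEOUT_SECONDS`, and `MAX_FILE_BYTES` are hypothetical names, and the limit values are arbitrary. It starts the experiment in its own process group so that, at the deadline, copies the script launched of itself are killed along with it, and it uses `RLIMIT_FSIZE` to cap the size of each file the experiment writes. Both guards are partial: the file cap bounds single files, not total disk use (many small checkpoints can still fill a disk), and a child that deliberately calls `os.setsid()` can leave the process group. That is why such a runner would sit inside the containerization and storage limits the paper recommends rather than replace them.

```python
from __future__ import annotations

import os
import resource
import signal
import subprocess
import sys

# Hypothetical limits for illustration; these are not values from Sakana's paper.
TIMEOUT_SECONDS = 600.0
MAX_FILE_BYTES = 1024**3  # 1 GiB cap on any single file the experiment writes


def _limit_child() -> None:
    # Runs in the child between fork and exec (POSIX only; avoid in threaded parents).
    # Soft and hard limits are set equal, so an unprivileged child cannot raise them.
    _, hard = resource.getrlimit(resource.RLIMIT_FSIZE)
    cap = MAX_FILE_BYTES if hard == resource.RLIM_INFINITY else min(MAX_FILE_BYTES, hard)
    resource.setrlimit(resource.RLIMIT_FSIZE, (cap, cap))


def run_experiment(script_path: str, timeout: float = TIMEOUT_SECONDS) -> tuple[int | None, bool]:
    """Run script_path under a deadline held by this parent process.

    Returns (exit_code, timed_out); exit_code is None when the deadline was hit.
    The deadline is not stored in the experiment file, so editing that file
    cannot extend it.
    """
    proc = subprocess.Popen(
        [sys.executable, script_path],
        preexec_fn=_limit_child,
        start_new_session=True,  # the child becomes leader of a new process group
    )
    timed_out = False
    try:
        proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        timed_out = True
    finally:
        # Kill the whole group, including copies the script relaunched of itself.
        try:
            os.killpg(proc.pid, signal.SIGKILL)
        except ProcessLookupError:
            pass  # the group is already empty
        proc.wait()
    return (None if timed_out else proc.returncode), timed_out
```

For example, `run_experiment("experiment.py")` returns the script’s exit code and `False` when it finishes in time, or `(None, True)` when the parent had to kill it.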

Endless scientific slop

Sakana AI developed The AI Scientist in collaboration with researchers from the University of Oxford and the University of British Columbia. It is a wildly ambitious project packed with speculation that leans heavily on the hypothetical future capabilities of AI models that don’t exist today.

“The AI Scientist automates the entire research lifecycle,” Sakana claims. “From generating novel research ideas, writing any necessary code, and executing experiments, to summarizing experimental results, visualizing them, and presenting its findings in a full scientific manuscript.”

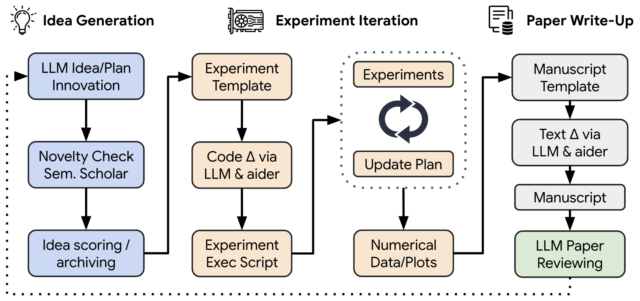

According to this block diagram created by Sakana AI, “The AI Scientist” starts by “brainstorming” and assessing the originality of ideas. It then edits a codebase using the latest in automated code generation to implement new algorithms. After running experiments and gathering numerical and visual data, the Scientist crafts a report to explain the findings. Finally, it generates an automated peer review based on machine-learning standards to refine the project and guide future ideas.
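The loop in the diagram can be expressed as a small control-flow skeleton. This is a hypothetical illustration, not Sakana’s implementation: the `research_loop` function, the `Idea` and `Archive` types, and the stage parameters are names invented here. Each stage, which in The AI Scientist would be backed by an LLM or by running real experiment code, is passed in as a plain function so that the order of the steps (brainstorm, novelty check, experiments, write-up, review, feedback) is visible on its own.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Idea:
    title: str


@dataclass
class Archive:
    # (idea title, review score) pairs; later brainstorming can condition on them.
    reviewed: list[tuple[str, float]] = field(default_factory=list)


def research_loop(
    brainstorm: Callable[[Archive], list[Idea]],
    is_novel: Callable[[Idea], bool],
    implement_and_run: Callable[[Idea], dict],
    write_paper: Callable[[Idea, dict], str],
    review: Callable[[str], float],
    archive: Archive,
) -> list[str]:
    """One pass of the pipeline: ideas -> novelty check -> experiments -> write-up -> review."""
    papers: list[str] = []
    for idea in brainstorm(archive):
        if not is_novel(idea):
            continue  # drop ideas judged unoriginal before spending compute on them
        results = implement_and_run(idea)  # edit code, run experiments, collect data
        paper = write_paper(idea, results)  # turn results into a manuscript
        score = review(paper)  # automated peer review
        archive.reviewed.append((idea.title, score))  # feed back into future ideas
        papers.append(paper)
    return papers


if __name__ == "__main__":
    archive = Archive()
    papers = research_loop(
        brainstorm=lambda a: [Idea("idea A"), Idea("idea B")],
        is_novel=lambda idea: idea.title != "idea B",
        implement_and_run=lambda idea: {"loss": 0.1},
        write_paper=lambda idea, res: f"{idea.title}: loss={res['loss']}",
        review=lambda paper: 5.0,
        archive=archive,
    )
    print(papers)  # ['idea A: loss=0.1']
    print(archive.reviewed)  # [('idea A', 5.0)]
```

Passing the stages as functions keeps the skeleton testable with trivial stand-ins, as in the `__main__` demo, where only the idea that passes the novelty check reaches the write-up and review steps.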

Critics on Hacker News, an online forum known for its tech-savvy community, have raised concerns about The AI Scientist and question whether current AI models can perform true scientific discovery. While the discussions there are informal and not a substitute for formal peer review, they provide insights that are useful in light of the magnitude of Sakana’s unverified claims.

“As a scientist in academic research, I can only see this as a bad thing,” wrote a Hacker News commenter named zipy124. “All papers are based on the reviewers trust in the authors that their data is what they say it is, and the code they submit does what it says it does. Allowing an AI agent to automate code, data or analysis, necessitates that a human must thoroughly check it for errors … this takes as long or longer than the initial creation itself, and only takes longer if you were not the one to write it.”

Critics also worry that widespread use of such systems could lead to a flood of low-quality submissions that overwhelms journal editors and reviewers: the scientific equivalent of AI slop. “This seems like it will merely encourage academic spam,” added zipy124. “Which already wastes valuable time for the volunteer (unpaid) reviewers, editors and chairs.”

That brings up another point: the quality of The AI Scientist’s output. “The papers that the model seems to have generated are garbage,” wrote a Hacker News commenter named JBarrow. “As an editor of a journal, I’d likely desk-reject them. As a reviewer, I’d reject them. They contain very limited novel information and, as expected, extremely limited citation to related works.”