Extracting related information from structured tables required greater than a normal RAG method. We enhanced immediate engineering with listed time period strategies, contextual row retrieval, and dynamic few-shot examples to generate dependable Pandas queries, making our system each correct and environment friendly.

Co-authored by Michael Leshchinsky

Clalit is Israel’s largest Well being Upkeep Group — it serves each because the insurer and because the well being supplier for over 4.5M members throughout Israel. As you could anticipate, a corporation as giant as this has lots of helpful info that ought to be accessible to all its prospects and workers — lists of medical suppliers, sufferers’ eligibilities, details about medical assessments and procedures, and rather more. Sadly, this info is unfold throughout a number of sources and methods, making it fairly troublesome for the end-user to fetch the precise piece of data they’re in search of.

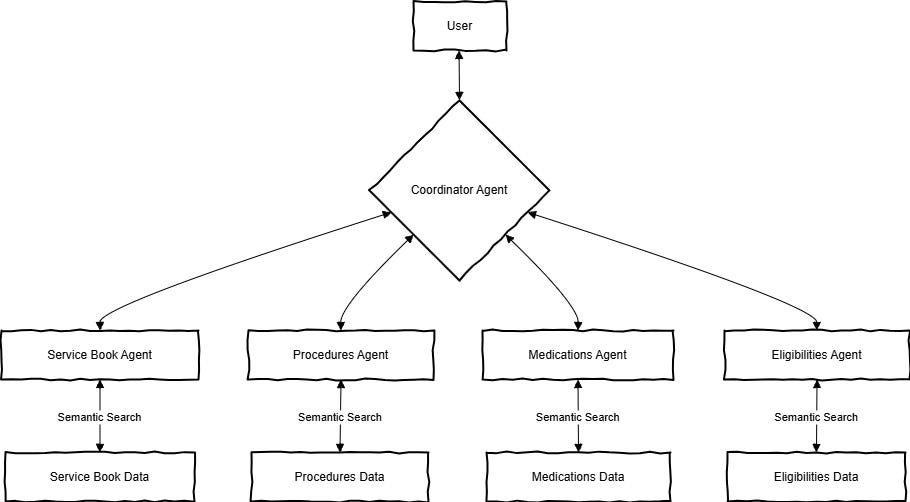

To unravel this we’ve determined to construct a multi-agent RAG system that may perceive which data area it wants to question, fetch related context from one or a number of sources, and supply the consumer with the right and full reply primarily based on this context.

Every agent is dedicated to a selected area and is a small RAG itself, so it will probably retrieve context and reply questions on its area. A coordinator agent understands the customers’ questions and decides which agent(s) it ought to handle. Then, it aggregates the solutions from all related brokers and compiles a solution for consumer.

No less than, that was the preliminary concept — in a short time we found that not all information sources are made equal and a few brokers ought to be one thing fully completely different from what one might name a classical RAG.

On this article, we are going to give attention to one such use case -the medical suppliers listing, also called the service guide. The service E-book is a desk with ~23K rows the place every row represents a medical supplier. The data for every supplier contains its handle and phone info, the professions and companies supplied (together with workers names), opening hours, and a few extra free-text feedback relating to the clinic’s accessibility and feedback.

Listed below are some pseudo-examples from the desk (displayed vertically as a result of giant variety of columns).

Our preliminary method was to transform every row to a textual content doc, index it, after which use a easy RAG to extract it. Nonetheless, we rapidly discovered that this method has a number of limitations:

– Consumer may anticipate a solution with a number of rows. For instance, take into account the query: “which pharmacies can be found in Tel-Aviv?”. What number of paperwork ought to our RAG retrieve? What a few query the place consumer explicitly defines what number of rows to anticipate?

– It could be extraordinarily troublesome for the retriever to distinguish between the completely different fields — a clinic in a sure metropolis could be known as after one other metropolis (e.g., Jerusalem clinic is positioned in Jerusalem Rd. in Tel-Aviv)

– As people, we’d most likely not “text-scan” a desk to extract info out of it. As a substitute, we would like to filter the desk in accordance with guidelines. There is no such thing as a purpose our software ought to behave otherwise.

As a substitute, we determined to go in a distinct route — ask the LLM to transform consumer’s query to laptop code that may extract the related rows.

This method is impressed by llama-index’s Pandas Query Engine. Briefly, the immediate for the LLM is constructed of the consumer’s question, df.head() to show the LLM how the desk is structured, and a few common directions.

question = """

Your job is to transform consumer's inquiries to a single line of Pandas code that may filter `df` and reply the consumer's question.

---

Listed below are the highest 5 rows from df:

{df}

---

- Your output will likely be a single code line with no extra textual content.

- The output should embrace: the clinic/heart identify, kind, handle, cellphone quantity(s), extra remarks, web site, and all columns together with the reply or that had been filtered.

- Think twice about every search time period!

---

USER'S QUESTION: {user_query}

PANDAS CODE:

"""response = llm.full(question.format(df=df.head(),

user_query=user_query)

)

strive:

result_df = eval(response.textual content)

besides:

result_df = pd.DataFrame()

Sounds straightforward sufficient, proper? Nicely, in observe we encountered a merciless actuality the place in most of our generated code, pandas threw an error for one purpose or one other, so the principle work solely began right here.

We had been capable of establish three important causes for generated code failure and use a number of dynamic prompt-engineering methods to deal with them:

1. Fixing an issue with in-exact phrases by including “thesaurus” of the phrases that could possibly be used to filter every column.

2. Offering the LLM with related rows from df as a substitute of arbitrary rows extracted by df.head().

3. Dynamic few-shotting with tailor-made code examples to assist the LLM generate right pandas code.

In lots of circumstances, the consumer’s query is probably not precisely what the desk “expects”. For instance, a consumer might ask for pharmacies in Tel Aviv, however the time period within the desk is Tel-Aviv-Jaffa. In one other case, the consumer could also be in search of an oftalmologist, as a substitute of an ophthalmologist, or for a heart specialist as a substitute of cardiology. It is going to be troublesome for the LLM to put in writing code that may cowl all these circumstances. As a substitute, it could be to retrieve the right phrases and embrace it within the immediate as strategies.

As you could think about, the service guide has a finite variety of phrases in every column — clinic kind could also be a hospital clinic, a non-public clinic, a primary-care clinic, and so forth. There’s a finite variety of metropolis names, medical professions and companies, and medical workers. The answer we used was to create a listing of all phrases (below every area), hold every one as a doc, after which index it as a vector.

Then, utilizing a retrieval solely engine, we extract ~3 gadgets for every search time period, and embrace these within the immediate. For instance, if the consumer’s query was “which pharmacies can be found in Tel Aviv?”, the next phrases could be retrieved:

– Clinic kind: Pharmacy; Main-care clinic; Hospital clinic

– Metropolis: Tel-Aviv-Jaffa, Tel-Sheva, Pharadis

– Professions and companies: Pharmacy, Proctology, Pediatrics

– …

The retrieved phrases embrace the true phrases we’re in search of (Pharmacy, Tel-Aviv-Jaffa), alongside some irrelevant phrases that will sound related (Tel-Sheva, proctology). All these phrases will likely be included within the immediate as strategies, and we anticipate the LLM to type out those that could be helpful.

from llama_index.core import VectorStoreIndex, Doc# Indexing all metropolis names

unique_cities = df['city'].distinctive()

cities_documents = [Document(text=city) for city in unique_cities]

cities_index = VectorStoreIndex.from_documents(paperwork=cities_documents)

cities_retriever = cities_index.as_retriever()

# Retrieving advised cities with the consumer's question

suggest_cities = ", ".be a part of([doc.text for doc in cities_retriever.retrieve(user_query)])

# Revised question

# Word the way it now contains strategies for related cities.

# In an identical method, we are able to add strategies for clinic varieties, medical professions, and many others.

question = """Your job is to transform consumer's inquiries to a single line of Pandas code that may filter `df` and reply the consumer's question.

---

Listed below are the highest 5 rows from df:

{df}

---

This are the most probably cities you are in search of: {suggest_cities}

---

- Your output will likely be a single code line with no extra textual content.

- The output should embrace: the clinic/heart identify, kind, handle, cellphone quantity(s), extra remarks, web site, and all columns together with the reply or that had been filtered.

- Think twice about every search time period!

---

USER'S QUESTION: {user_query}

PANDAS CODE:

"""

# Re-filtering the desk utilizing the brand new question

response = llm.full(question.format(df=df.head(),

suggest_cities=suggest_cities,

user_query=user_query)

)

strive:

result_df = eval(response.textual content)

besides:

result_df = pd.DataFrame()

By default, PandasQueryEngine contains the highest rows of df within the immediate by embedding df.head() into it, to permit the LLM to be taught the desk’s construction. Nonetheless, these high 5 rows are unlikely to be related to the consumer’s query. Think about we might correctly choose which rows are included within the immediate, such that the LLM won’t solely be taught the desk’s construction, but additionally see examples which can be related for the present activity.

To implement this concept, we used the preliminary method described above:

- We transformed every row to textual content and listed it as a separate doc

- Then, we used a retriever to extract the 5 most related rows towards the consumer’s question, and included them within the df instance throughout the immediate

- An essential lesson we be taught alongside the way in which was to incorporate some random, irrelevant examples, so the LLM may see adverse examples and understand it has to distinguish between them.

Right here’s some code instance:

# Indexing and retrieving advised metropolis names and different fields, as proven above

...# We convert every row to a doc.

# Word how we hold the index of every row - we are going to use it later.

rows = df.fillna('').apply(lambda x: ", ".be a part of(x), axis=1).to_dict()

rows_documents = [Document(text=v, metadata={'index_number': k}) for k, v in rows.items()]

# Index all examples

rows_index = VectorStoreIndex.from_documents(paperwork=rows_documents)

rows_retriever = rows_index.as_retriever(top_k_similarity=5)

# Generate instance df to incorporate in immediate

retrieved_indices = rows_retriever.retrieve(user_query)

relevant_indices = [i.metadata['index_number'] for i in retrieved_indices]

# Revised question

# This time we additionally add an instance to the immediate

question = """Your job is to transform consumer's inquiries to a single line of Pandas code that may filter `df` and reply the consumer's question.

---

Listed below are the highest 5 rows from df:

{df}

---

This are the most probably cities you are in search of: {suggest_cities}

---

- Your output will likely be a single code line with no extra textual content.

- The output should embrace: the clinic/heart identify, kind, handle, cellphone quantity(s), extra remarks, web site, and all columns together with the reply or that had been filtered.

- Think twice about every search time period!

---

Instance:

{relevant_example}

---

USER'S QUESTION: {user_query}

PANDAS CODE:

"""

# Re-filtering the desk utilizing the brand new question

# Word how we embrace each df.head() (as random rows) and the highest 5 related rows extracted

# from the retriever

response = llm.full(question.format(df=pd.concat([df.head(), df.loc[relevant_indices]]),

suggest_cities=suggest_cities,

user_query=user_query)

)

strive:

result_df = eval(response.textual content)

besides:

result_df = pd.DataFrame()

Take into account the next consumer’s query: Does H&C clinic permits service animals?

The generated code was:

df[

(df['clinic_name'].str.comprises('H&C')) &

(df['accessibility'].str.comprises('service animals'))

][['clinic_name', 'address', 'phone number', 'accessability']]

At first look, the code appears right. However… the consumer didn’t wish to filter upon the column accessibility — quite to examine its content material!

Fairly early within the course of we discovered {that a} few-shots method, wherein an instance query and code reply are included within the immediate, might resolve this problem. Nonetheless, we realized that there are simply too many various examples we are able to consider, every of them emphasizing a distinct idea.

Our resolution was to create a listing of various examples, and use a retriever to incorporate the one that’s the most just like the present consumer’s query. Every instance is a dictionary, wherein the keys are

- QUESTION: A possible consumer’s query

- CODE: The requested output code, as we’d have write it

- EXPLANATION: Textual content rationalization emphasizing ideas we want the LLM to think about whereas producing the code.

For instance:

{

'QUESTION': 'Does H&C clinic permits service animals?',

'CODE': "df[df['clinic_name'].str.comprises('H&C')][['clinic_name', 'address', 'phone number', 'accessability']]""",

'EXPLANATION': "When requested about whether or not a service exists or not in a sure clinic - you are not anticipated to filter upon the associated column. Quite, it is best to return it for the consumer to examine!"

}

We are able to now prolonged this examples guide every time we encounter a brand new idea we would like our system to know the best way to deal with:

# Indexing and retrieving advised metropolis names, as seen earlier than

...# Indexing and retrieveing high 5 relvant rows

...

# Index all examples

examples_documents = [Document(text=ex['QUESTION'],

metadata={okay: v for okay, v in ex.gadgets()})

for ex in examples_book

]

examples_index = VectorStoreIndex.from_documents(paperwork=cities_documents)

examples_retriever = examples_index.as_retriever(top_k_similarity=1)

# Retrieve related instance

relevant_example = examples_retriever.retrieve(user_query)

relevant_example = f"""Query: {relevant_example.textual content}

Code: {relevant_example.metadata['CODE']}

Clarification: {relevant_example.metadata['EXPLANATION']}

"""

# Revised question

# This time we additionally add an instance to the immediate

question = """Your job is to transform consumer's inquiries to a single line of Pandas code that may filter `df` and reply the consumer's question.

---

Listed below are the highest 5 rows from df:

{df}

---

This are the most probably cities you are in search of: {suggest_cities}

---

- Your output will likely be a single code line with no extra textual content.

- The output should embrace: the clinic/heart identify, kind, handle, cellphone quantity(s), extra remarks, web site, and all columns together with the reply or that had been filtered.

- Think twice about every search time period!

---

Instance:

{relevant_example}

---

USER'S QUESTION: {user_query}

PANDAS CODE:

"""

# Re-filtering the desk utilizing the brand new question

response = llm.full(question.format(df=pd.concat([df.head(), df.loc[relevant_indices]]),

suggest_cities=suggest_cities,

relevant_example=relevant_example,

user_query=user_query)

)

strive:

result_df = eval(response.textual content)

besides:

result_df = pd.DataFrame()

On this article, we tried to explain a number of heuristics that we used to create a extra particular, dynamic prompting to extract information from our desk. Utilizing pre-indexed information and retrievers, we are able to enrich our immediate and make it custom-made to the consumer’s present query. It’s price mentioning that regardless that this makes the agent extra advanced, the operating time remained comparatively low, since retrievers are usually quick (in comparison with textual content turbines, at the very least). That is an illustration of the entire stream: