Notice how the selected samples capture more varied writing styles and edge cases.

In some examples, like clusters 1, 3, and 8, the furthest point does just look like a more varied example of the prototypical center.

Cluster 6 is an interesting case. It shows how some images are difficult even for a human to identify. But you can still see how this one could end up in a cluster whose centroid is an 8.

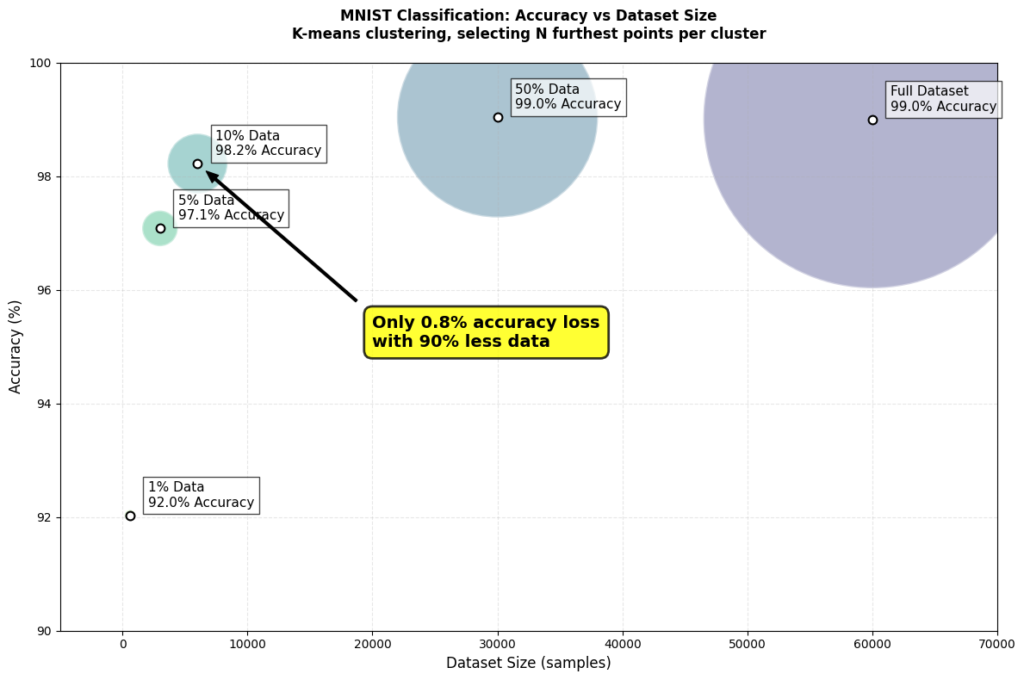

Recent research on neural scaling laws helps explain why data pruning with a “furthest-from-centroid” approach works, especially on the MNIST dataset.

Data Redundancy

Many training examples in large datasets are highly redundant.

Think about MNIST: how many nearly identical ‘7’s do we really need? The key to data pruning isn’t having more examples; it’s having the right examples.

Selection Strategy vs Dataset Size

One of the most interesting findings from that research is how the optimal data selection strategy changes with your dataset size:

- With “abundant” data: select harder, more diverse examples (furthest from cluster centers).

- With scarce data: select easier, more typical examples (closest to cluster centers).

This explains why our “furthest-from-centroid” strategy worked so well.

With MNIST’s 60,000 training examples, we were in the “abundant data” regime. In that regime, selecting diverse, challenging examples proved most beneficial.

Inspiration and Goals

I was inspired by these two recent papers (and the fact that I’m a data engineer):

Both explore ways to use data selection strategies to train performant models on less data.

Methodology

I used LeNet-5 as my model architecture.

For each experiment, I pruned the MNIST training set using one of the strategies below and trained a model. Testing was done against the full test set.

Due to time constraints, I only ran each experiment 5 times.

Full code and results are available here on GitHub.
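Here is a minimal sketch of that evaluation loop. It assumes a hypothetical `train_and_evaluate(seed)` hook that prunes the training set, trains LeNet-5 and returns accuracy on the full test set. The sketch only handles the bookkeeping: it repeats one configuration five times and reports the median accuracy and wall-clock run time. The names `ExperimentResult` and `run_experiment` are illustrative and are not taken from the author's repository.

```python
import statistics
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ExperimentResult:
    """Per-run accuracies and run times for one pruning configuration."""
    name: str
    accuracies: List[float] = field(default_factory=list)
    runtimes_s: List[float] = field(default_factory=list)

    @property
    def median_accuracy(self) -> float:
        return statistics.median(self.accuracies)

    @property
    def median_runtime_s(self) -> float:
        return statistics.median(self.runtimes_s)


def run_experiment(
    name: str,
    train_and_evaluate: Callable[[int], float],
    n_runs: int = 5,
) -> ExperimentResult:
    """Run one configuration n_runs times, timing each run.

    train_and_evaluate(seed) is a hypothetical hook: prune the training set,
    train the model, and return accuracy on the full test set.
    """
    result = ExperimentResult(name)
    for seed in range(n_runs):
        start = time.perf_counter()
        accuracy = train_and_evaluate(seed)
        result.runtimes_s.append(time.perf_counter() - start)
        result.accuracies.append(accuracy)
    return result


# Dummy trainer so the sketch runs on its own; these are NOT real results.
demo = run_experiment("demo", lambda seed: seed / 10)
print(demo.median_accuracy)  # 0.2
```

The median is less sensitive than the mean to a single unlucky run. That matters when each configuration only gets five runs.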

Strategy #1: Baseline, Full Dataset

- Standard LeNet-5 architecture

- Trained using 100% of the training data

Strategy #2: Random Sampling

- Randomly sample individual images from the training dataset
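Random sampling is the simplest pruning baseline. Below is a standard-library sketch that assumes the goal is to keep a fixed fraction of the training set. `random_subset` is a hypothetical helper name. Seeding the generator with the run number would give each repeated run a different, but reproducible, subset.

```python
import random
from typing import List


def random_subset(n_examples: int, keep_fraction: float, seed: int = 0) -> List[int]:
    """Return sorted indices of a uniformly random subset of the training set."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = round(n_examples * keep_fraction)
    rng = random.Random(seed)  # seeded so each run's subset is reproducible
    # Sample without replacement, so no image appears twice.
    return sorted(rng.sample(range(n_examples), k))


# Keep 50% of MNIST's 60,000 training images.
indices = random_subset(60_000, 0.5, seed=42)
print(len(indices))  # 30000
```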

Strategy #3: K-means Clustering with Different Selection Strategies

Here’s how this worked:

- Preprocess the images with PCA to reduce their dimensionality. Each image goes from 784 values (28×28 pixels) down to only 50 values. PCA does this while retaining the most important patterns and removing redundant information.

- Cluster using k-means. The number of clusters was set to 50 or 500, depending on the test. My poor CPU couldn’t handle much beyond 500 given all the experiments.

- Once the data was clustered, I tested different selection methods:

  - Closest-to-centroid: these represent a “typical” example of the cluster.

  - Furthest-from-centroid: these are more representative of edge cases.

  - Random from each cluster: randomly select within each cluster.

- PCA reduced noise and computation time. At first I was just flattening the images. Both the results and the compute time improved with PCA, so I kept it for the full experiment.

- I switched from standard K-means to MiniBatchKMeans clustering for better speed. With this many tests, the standard algorithm was too slow on my CPU.

- Setting up a proper test harness was key. Three things made life much easier: moving experiment configs into a YAML file, saving results to a file automatically, and having o1 write my visualization code.
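The clustering pipeline described above can be sketched with scikit-learn's `PCA` and `MiniBatchKMeans`. This sketch makes several assumptions:

- The images are already flattened into an array of shape `(n_samples, 784)`.
- Each strategy keeps the same fraction of every cluster, which is an assumption rather than the author's confirmed method.
- The function name `cluster_prune`, the `keep_fraction` parameter and `n_init=3` are illustrative choices, not the author's exact code.

Because rounding happens per cluster, the total kept can be off by a few examples.

```python
import numpy as np
from sklearn.cluster import MiniBatchKMeans
from sklearn.decomposition import PCA

STRATEGIES = ("closest", "furthest", "random")


def cluster_prune(
    images: np.ndarray,
    keep_fraction: float,
    strategy: str = "furthest",
    n_components: int = 50,
    n_clusters: int = 50,
    seed: int = 0,
) -> np.ndarray:
    """Return sorted indices of the training images to keep.

    images: shape (n_samples, 784), flattened 28x28 pixels.
    strategy: "closest" or "furthest" from the cluster centroid,
    or "random" within each cluster.
    """
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy!r}")

    # 1. Compress 784 pixel values into n_components PCA features.
    features = PCA(n_components=n_components, random_state=seed).fit_transform(images)

    # 2. Cluster the PCA features with mini-batch k-means.
    kmeans = MiniBatchKMeans(n_clusters=n_clusters, n_init=3, random_state=seed)
    labels = kmeans.fit_predict(features)

    # 3. Distance from every point to the centroid of its own cluster.
    dists = np.linalg.norm(features - kmeans.cluster_centers_[labels], axis=1)

    # 4. Keep the same fraction of each cluster, chosen by the strategy.
    rng = np.random.default_rng(seed)
    kept = []
    for c in range(n_clusters):
        members = np.flatnonzero(labels == c)
        k = round(len(members) * keep_fraction)
        if k == 0:
            continue
        order = np.argsort(dists[members])  # nearest to centroid first
        if strategy == "closest":
            chosen = members[order[:k]]
        elif strategy == "furthest":
            chosen = members[order[::-1][:k]]
        else:  # "random" within this cluster
            chosen = rng.choice(members, size=k, replace=False)
        kept.append(chosen)

    if not kept:
        return np.array([], dtype=np.intp)
    return np.sort(np.concatenate(kept))
```

Consider the 50% experiments with 500 clusters, where `train_images` stands for the flattened MNIST training set. There, a call like `cluster_prune(train_images, 0.5, "furthest", n_clusters=500)` would return the indices to train on.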

Median Accuracy & Run Time

Here are the median results. They compare our baseline LeNet-5, trained on the full dataset, with two strategies that used 50% of the dataset.

Accuracy vs Run Time: Full Results

The charts below show the results of my 4 pruning strategies compared to the baseline in red.

Key findings across multiple runs:

- Furthest-from-centroid consistently outperformed the other methods.

- There is definitely a sweet spot between compute time and model accuracy if you want to find it for your use case. More work needs to be done here.

If efficiency is what you’re after, I’m still surprised that simply shrinking the dataset at random gives acceptable results.

Future Plans

- Test this on my second brain. I want to fine-tune an LLM on my full Obsidian vault and test data pruning alongside hierarchical summarization.

- Explore other embedding methods for clustering. I could try training an autoencoder to embed the images rather than using PCA.

- Test this on larger, more complex datasets (CIFAR-10, ImageNet).

- Experiment with how model architecture affects the performance of data pruning strategies.

These findings suggest we need to rethink our approach to dataset curation:

- More data isn’t always better: there seem to be diminishing returns to bigger data and bigger models.

- Strategic pruning can actually improve results.

- The optimal strategy depends on your starting dataset size.

As people start sounding the alarm that we’re running out of data, I can’t help but wonder if less data is actually the key to useful, cost-effective models.

I intend to keep exploring this space. Please reach out if you find this interesting; I’m happy to connect and talk more 🙂