Amid the chaos and upheaval at the Social Security Administration (SSA) caused by Elon Musk’s so-called Department of Government Efficiency (DOGE), employees have now been asked to integrate a generative AI chatbot into their daily work.

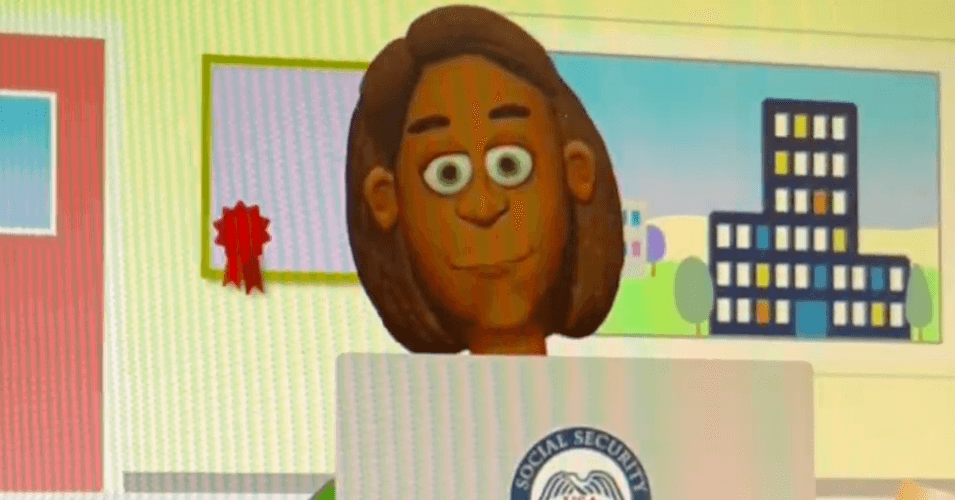

But before any of them can use it, they all need to watch a four-minute training video featuring an animated, four-fingered woman crudely drawn in a style that would not look out of place on websites created in the early part of this century.

Aside from the Web 1.0-era graphics, the video also fails at its primary purpose of informing SSA staff about one of the most important aspects of using the chatbot: Don’t use any personally identifiable information (PII) when using the assistant.

“Our apologies for the oversight in our training video,” the SSA wrote in a fact sheet about the chatbot that was shared in an email to employees last week. The fact sheet, which WIRED has reviewed, adds that employees using the chatbot should “refrain from uploading PII to the chatbot.”

Work on the chatbot, called the Agency Support Companion, began about a year ago, long before Musk or DOGE arrived at the agency, one SSA employee with knowledge of the app’s development tells WIRED. The app has been in limited testing since February, before it was rolled out to all SSA staffers last week.

In an email announcing its availability to all staff this week, and reviewed by WIRED, the agency wrote that the chatbot was “designed to assist employees with everyday tasks and enhance productivity.”

Several SSA employees, including front office staff, tell WIRED that they simply ignored the email about the chatbot because they were too busy with actual work, compensating for the reduced headcount at SSA offices. Others said they had briefly tested the chatbot but were immediately unimpressed.

“Honestly, no one has really been talking about it at all,” one source tells WIRED. “I’m not sure most of my coworkers even watched the training video. I played around with the chatbot a bit and several of the responses I got from it were incredibly vague and/or inaccurate.”

Another source said their coworkers were mocking the training video.

“You could hear my coworkers making fun of the graphics. Nobody I know is [using it]. It’s so clunky and bad,” the source says, adding that they too were given inaccurate information by the chatbot.