I thought most companies would have built or implemented their own RAG agents by now.

An AI knowledge agent can dig through internal documentation (websites, PDFs, random docs) and answer employees in Slack (or Teams/Discord) within a few seconds. So these bots should significantly reduce the time employees spend sifting through information.

I’ve seen a few of these in bigger tech companies, like AskHR from IBM, but they aren’t all that mainstream yet.

If you’re keen to understand how they’re built and how many resources it takes to build a simple one, this is an article for you.

I’ll go through the tools, techniques, and architecture involved, while also looking at the economics of building something like this. I’ll also include a section on what you’ll end up focusing on the most.

There’s also a demo at the end of what this will look like in Slack.

If you’re already familiar with RAG, feel free to skip the next section; it’s just a bit of repetitive material on agents and RAG.

What’s RAG and Agentic RAG?

Most of you who read this will know what Retrieval-Augmented Generation (RAG) is, but in case you’re new to it, it’s a way to fetch information that gets fed into the large language model (LLM) before it answers the user’s question.

This allows us to provide relevant information from various documents to the bot in real time so it can answer the user correctly.

This retrieval system does more than simple keyword search, since it finds similar matches rather than just exact ones. For example, if someone asks about fonts, a similarity search might return documents on typography.

Many would say that RAG is a fairly simple concept to understand, but how you store information, how you fetch it, and what kind of embedding models you use still matter a lot.

If you’re keen to learn more about embeddings and retrieval, I’ve written about this here.

Today, people have gone further and mostly work with agent systems.

In agent systems, the LLM can decide where and how it should fetch information, rather than just having content dumped into its context before generating a response.

It’s important to remember that just because more advanced tools exist doesn’t mean you should always use them. You want to keep the system intuitive and also keep API calls to a minimum.

With agent systems the number of API calls will increase, since the agent needs to call at least one tool and then make another call to generate a response.

That said, I really like the user experience of the bot “going somewhere” (to a tool) to look something up. Seeing that flow in Slack helps the user understand what’s happening.

But going with an agent or using a full framework isn’t necessarily the better choice. I’ll elaborate on this as we continue.

Technical Stack

There are a ton of options for agent frameworks, vector databases, and deployment, so I’ll go through some.

For deployment, since we’re working with Slack webhooks, we’re dealing with an event-driven architecture where the code only runs when there’s a question in Slack.

To keep costs to a minimum, we can use serverless functions. The choice is either going with AWS Lambda or picking a newer vendor.
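Because Slack only calls the function when an event arrives, the serverless entry point mostly has to verify the request, answer Slack quickly, and hand the real work off. Below is a minimal, framework-agnostic sketch; `handle_slack_event`, `verify_slack_signature`, the returned dict shape, and the `enqueue` callback are hypothetical names, while the `url_verification` challenge, the `event_callback` envelope, the `v0` HMAC signature, and the `X-Slack-Retry-Num` retry header follow Slack's Events API conventions. Slack expects a 2xx response within about three seconds, which is why the RAG work is deferred.

```python
import hashlib
import hmac
import json
import time
from typing import Callable, Optional


def verify_slack_signature(signing_secret: str, timestamp: str, body: str,
                           signature: str, now: Optional[float] = None) -> bool:
    """Validate Slack's v0 signature: HMAC-SHA256 over "v0:<timestamp>:<raw body>"."""
    now = time.time() if now is None else now
    if abs(now - int(timestamp)) > 60 * 5:  # reject old requests to limit replay attacks
        return False
    base = f"v0:{timestamp}:{body}".encode()
    expected = "v0=" + hmac.new(signing_secret.encode(), base, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


def handle_slack_event(body: str, headers: dict, enqueue: Callable[[dict], None]) -> dict:
    """Hypothetical serverless entry point; call verify_slack_signature() before this."""
    lowered = {k.lower(): v for k, v in headers.items()}
    # Slack retries when it gets no 2xx in time; ignore retries so a question isn't answered twice.
    if "x-slack-retry-num" in lowered:
        return {"status": 200, "body": ""}
    payload = json.loads(body)
    # One-time handshake when the Request URL is registered in the Slack app settings.
    if payload.get("type") == "url_verification":
        return {"status": 200, "body": payload["challenge"]}
    if payload.get("type") == "event_callback":
        event = payload.get("event", {})
        # This sketch only reacts to @-mentions from humans, never to bot posts.
        if event.get("type") == "app_mention" and not event.get("bot_id"):
            enqueue(event)  # hand the slow RAG work to a background job, then return
    return {"status": 200, "body": ""}
```

On AWS Lambda or Modal you would wrap this in the platform's own handler signature and pass the raw request body (not re-serialized JSON) to the signature check.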

Platforms like Modal are technically built to serve LLMs, but they work well for long-running ETL processes, and for LLM apps in general.

Modal hasn’t been battle-tested as much, and you’ll notice that in terms of latency, but it’s very smooth and offers super cheap CPU pricing.

I should note, though, that when setting this up with Modal on the free tier, I’ve had a few 500 errors, but that might be expected.

As for picking the agent framework, this is completely optional. I did a comparison piece a few weeks ago on open-source agentic frameworks that you can find here, and the one I left out was LlamaIndex.

So I decided to give it a try here.

The last thing you’ll need to pick is a vector database, or a database that supports vector search. This is where we store the embeddings and other metadata, so we can perform similarity search when a user’s query comes in.
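At its core, a vector database stores each embedding next to its text and metadata and returns the closest vectors for a query vector. The toy, in-memory sketch below shows that contract with brute-force cosine similarity. `TinyVectorStore` and `Record` are hypothetical, the 2-D vectors stand in for real embeddings, and production databases such as Qdrant use approximate indexes (HNSW) rather than scanning every record.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    id: str
    vector: list[float]
    text: str
    metadata: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Toy stand-in for a vector DB: embeddings + text + metadata, brute-force search."""

    def __init__(self) -> None:
        self.records: dict[str, Record] = {}

    def upsert(self, record: Record) -> None:
        self.records[record.id] = record  # same id overwrites, like an upsert

    def search(self, query_vector: list[float], top_k: int = 3,
               where: Optional[dict] = None) -> list[tuple[float, Record]]:
        """Return the top_k (score, record) pairs, optionally filtered by exact metadata values."""
        candidates = [r for r in self.records.values()
                      if not where or all(r.metadata.get(k) == v for k, v in where.items())]
        scored = [(cosine(query_vector, r.vector), r) for r in candidates]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]
```

The metadata filter is what lets each agent tool search only its own slice of the collection.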

There are lots of options out there, but I think the ones with the highest potential are Weaviate, Milvus, pgvector, Redis, and Qdrant.

Both Qdrant and Milvus have pretty generous free tiers for their cloud offerings. Qdrant, I know, allows us to store both dense and sparse vectors. LlamaIndex, along with most agent frameworks, supports many different vector databases, so any of them can work.

I’ll try Milvus more in the future to compare performance and latency, but for now, Qdrant works well.

Redis is a solid pick too, or really any vector extension of your existing database.

Cost & time to build

In terms of time and cost, you have to account for engineering hours, cloud, embedding, and large language model (LLM) costs.

It doesn’t take that much time to boot up a framework and run something minimal. What takes time is connecting the content properly, prompting the system, parsing the outputs, and making sure it runs fast enough.

As for overhead costs, the cloud cost of running the agent system is minimal for one bot at one company using serverless functions, as you saw in the table in the last section.

However, vector databases get more expensive the more data you store.

Both Zilliz and Qdrant Cloud have a decent free tier for your first 1 to 5 GB of data, so unless you go beyond a few thousand chunks you may not pay anything.

You will start paying once you go beyond the thousands mark, with Weaviate being the most expensive of the vendors above.

As for the embeddings, these are generally very cheap.

You can see a table below on using OpenAI’s text-embedding-3-small with chunks of different sizes when you embed 1 to 10 million texts.
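Embedding cost is simple arithmetic: total tokens times the per-token price. The sketch below assumes a price of $0.02 per million tokens for text-embedding-3-small and illustrative chunk sizes of 256 to 1,024 tokens; check OpenAI's current pricing before relying on the numbers.

```python
def embedding_cost_usd(num_texts: int, tokens_per_text: int,
                       usd_per_million_tokens: float = 0.02) -> float:
    """One-off cost of embedding a corpus; the default price is an assumption."""
    return num_texts * tokens_per_text / 1_000_000 * usd_per_million_tokens


for num_texts in (1_000_000, 10_000_000):
    for tokens in (256, 512, 1024):  # illustrative chunk sizes in tokens
        cost = embedding_cost_usd(num_texts, tokens)
        print(f"{num_texts:>10,} texts x {tokens:>4} tokens: ~${cost:,.2f}")
```

At that assumed price, one million 512-token chunks comes to about $10, which is why embedding cost rarely dominates.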

When people start optimizing for embeddings and storage, they’ve usually moved beyond embedding millions of texts.

The one thing that matters most, though, is which large language model (LLM) you use. You need to think about API prices, since an agent system will typically call an LLM two to four times per run.

For this system, I’m using GPT-4o-mini or Gemini Flash 2.0, which are the cheapest options.

So let’s say a company uses the bot a few hundred times per day and each run costs us 2–4 API calls; we’d end up at less than a dollar per day and around $10–50 per month.
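The same back-of-the-envelope math applies to LLM calls. In the sketch below, the per-million-token prices are assumptions (roughly GPT-4o-mini list prices at the time of writing), and the 3,000 input and 300 output tokens per call are illustrative guesses for an agent call that includes retrieved chunks.

```python
def monthly_llm_cost_usd(runs_per_day: int, calls_per_run: int,
                         input_tokens_per_call: int, output_tokens_per_call: int,
                         usd_per_m_input: float, usd_per_m_output: float,
                         days: int = 30) -> float:
    """Rough monthly LLM bill for an agent that makes several calls per run."""
    calls = runs_per_day * calls_per_run * days
    input_cost = calls * input_tokens_per_call / 1_000_000 * usd_per_m_input
    output_cost = calls * output_tokens_per_call / 1_000_000 * usd_per_m_output
    return input_cost + output_cost


# Assumed: 300 runs/day, 3 calls/run, 3,000 input + 300 output tokens per call,
# $0.15 / $0.60 per million input / output tokens.
monthly = monthly_llm_cost_usd(300, 3, 3_000, 300, 0.15, 0.60)
print(f"~${monthly / 30:.2f} per day, ~${monthly:.2f} per month")
```

With these assumed inputs it comes to about $17 a month. Because the bill scales linearly with price, a model that costs 10x or 100x more per token raises the monthly total by the same factor.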

You can see that switching to a more expensive model would increase the monthly bill by 10x to 100x. ChatGPT is largely subsidized for free users, but when you build your own applications you’ll be paying for it.

There will be smarter and cheaper models in the future, so whatever you build now will likely improve over time. But start small, because costs add up, and for simple systems like this you don’t need the models to be exceptional.

The next section gets into how to build this system.

The architecture (processing documents)

The system has two parts. The first is how we split up documents (what we call chunking) and embed them. This first part is crucial, as it will dictate how the agent answers later.

So, to make sure you’re preparing all the sources properly, you need to think carefully about how to chunk them.

If you look at the document above, you can see that we can lose context if we split the document based on headings and also on the number of characters: the paragraphs attached to the first heading get split up for being too long, so the later pieces no longer carry that heading’s context.

You need to be smart about ensuring each chunk has enough context (but not too much). You also need to make sure each chunk is attached to metadata so it’s easy to trace back to where it was found.
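One way to keep headings attached to split-up paragraphs is to track the current heading path and prefix it to every chunk created under it. The sketch below assumes the source has already been converted to Markdown-style `#` headings (for example from a PDF or HTML export); `chunk_by_headings` and `Chunk` are hypothetical, and this is not the author's Docling pipeline.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    metadata: dict


HEADING = re.compile(r"^(#{1,6})[ \t]+(.*)$")


def chunk_by_headings(doc: str, source_url: str, max_chars: int = 800) -> list[Chunk]:
    """Split on headings, then pack paragraphs up to max_chars per chunk.
    Every chunk is prefixed with its heading trail so it keeps its context.
    A single paragraph longer than max_chars is kept whole in this sketch."""
    chunks: list[Chunk] = []
    path: list[str] = []        # heading trail, e.g. ["Benefits", "Dental"]
    paragraphs: list[str] = []  # finished paragraphs under the current heading
    current: list[str] = []     # lines of the paragraph being read

    def emit(body: str) -> None:
        header = " > ".join(path)
        text = f"{header}\n\n{body}" if header else body
        chunks.append(Chunk(text, {"source": source_url, "section": header,
                                   "chunk_index": len(chunks)}))

    def end_paragraph() -> None:
        if current:
            paragraphs.append("\n".join(current))
            current.clear()

    def flush() -> None:
        buf = ""
        for para in paragraphs:
            if buf and len(buf) + len(para) + 2 > max_chars:
                emit(buf)
                buf = para
            else:
                buf = f"{buf}\n\n{para}" if buf else para
        if buf:
            emit(buf)
        paragraphs.clear()

    for line in doc.splitlines():
        match = HEADING.match(line)
        if match:
            end_paragraph()
            flush()  # close out the previous section before switching headings
            level = len(match.group(1))
            path[:] = path[: level - 1] + [match.group(2).strip()]
        elif line.strip():
            current.append(line.strip())
        else:
            end_paragraph()
    end_paragraph()
    flush()
    return chunks
```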

This is where you’ll spend the most time, and honestly, I think there should be better tools out there to do this intelligently.

I ended up using Docling for PDFs, building it out to attach elements based on headings and paragraph sizes. For web pages, I built a crawler that looked over page elements to decide whether to chunk based on anchor tags, headings, or general content.

Remember, if the bot is supposed to cite sources, each chunk needs to be attached to URLs, anchor tags, page numbers, block IDs, and permalinks so the system can correctly locate the information being used.
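For web pages, a simple version of that idea is to split on h1 to h3 headings and keep each heading's `id` so every chunk gets a `page_url#anchor` permalink to cite. This sketch uses only Python's built-in `html.parser`; `AnchorSectionParser` and `html_to_chunks` are hypothetical, and real sites need extra handling for navigation menus, nested layouts, and headings without ids.

```python
from html.parser import HTMLParser


class AnchorSectionParser(HTMLParser):
    """Collect text per h1-h3 section and remember each heading's id for permalinks."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        # Text before the first heading goes into an untitled section.
        self.sections: list[dict] = [{"title": [], "anchor": None, "text": []}]
        self._in_heading = False
        self._skip = 0  # depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag in self.HEADINGS:
            self._in_heading = True
            self.sections.append({"title": [], "anchor": dict(attrs).get("id"), "text": []})

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1
        elif tag in self.HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        if self._skip or not data.strip():
            return
        key = "title" if self._in_heading else "text"
        self.sections[-1][key].append(data.strip())


def html_to_chunks(html: str, page_url: str) -> list[dict]:
    parser = AnchorSectionParser()
    parser.feed(html)
    parser.close()
    chunks = []
    for section in parser.sections:
        body = " ".join(section["text"])
        if not body:
            continue
        title = " ".join(section["title"])
        url = f"{page_url}#{section['anchor']}" if section["anchor"] else page_url
        chunks.append({"text": f"{title}\n\n{body}".strip(), "title": title, "url": url})
    return chunks
```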

Since a lot of the content you’re working with is scattered and often low quality, I also decided to summarize texts using an LLM. These summaries were given different labels with higher authority, which meant they were prioritized during retrieval.
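The exact prioritization mechanism isn't described here. One simple option, shown as an assumption, is to store an `authority` label in each chunk's metadata and add a small score boost at retrieval time so summaries win close calls. `Hit`, `prioritize`, the label names, and the boost values are all hypothetical and would need tuning.

```python
from dataclasses import dataclass, field


@dataclass
class Hit:
    text: str
    score: float                                  # similarity score from the vector search
    metadata: dict = field(default_factory=dict)


# Hypothetical authority labels and additive boosts; tune them on your own data.
AUTHORITY_BOOST = {"summary": 0.15, "official": 0.05, "raw": 0.0}


def prioritize(hits: list[Hit], top_k: int = 5) -> list[Hit]:
    """Re-order hits so higher-authority chunks (e.g. LLM-written summaries) win close calls."""
    def boosted(hit: Hit) -> float:
        label = hit.metadata.get("authority", "raw")  # unlabeled chunks count as raw
        return hit.score + AUTHORITY_BOOST.get(label, 0.0)

    return sorted(hits, key=boosted, reverse=True)[:top_k]
```

An additive boost only reorders chunks whose scores are already close, so a clearly better raw chunk still wins.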

There’s also the option to push the summaries into their own tools and keep deep-dive information separate, letting the agent decide which one to use. But that will look strange to users, since it’s not intuitive behavior.

Still, I have to stress that if the quality of the source information is poor, it’s hard to make the system work well.

For example, if a user asks how an API request should be made and there are four different web pages giving different answers, the bot won’t know which one is most relevant.

To demo this, I had to do some manual review. I also had AI do deeper research around the company to help fill in gaps, and then I embedded that too.

In the future, I think I’ll build something better for document ingestion, probably with the help of a language model.

The architecture (the agent)

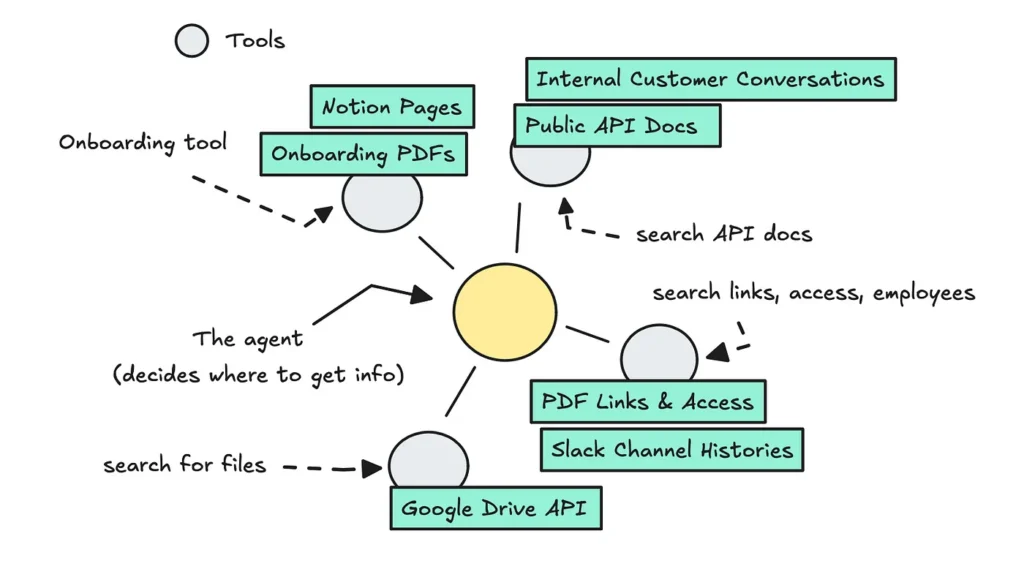

For the second part, where we connect to this data, we need to build a system where an agent can connect to different tools that contain different amounts of data from our vector database.

We stick to a single agent to keep it easy enough to control. This one agent can decide what information it needs based on the user’s question.

It’s better not to complicate things by building it out to use many agents, or you’ll run into issues, especially with these smaller models.

Although this may go against my own recommendations, I did set up a first LLM function that decides whether we need to run the agent at all.

This was mainly for the user experience, as it takes a few extra seconds to boot up the agent (even when starting it as a background task when the container starts).
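A gate like that can be a single cheap LLM call that returns a small JSON decision. In the sketch below, `ROUTER_PROMPT`, `route`, and the JSON shape are hypothetical (not the author's prompt), and `complete` stands in for whichever client function sends a prompt to your model and returns its text. Anything other than an explicit `false` falls back to running the agent, which keeps the failure mode safe.

```python
import json
from typing import Callable

ROUTER_PROMPT = """You triage questions sent to an internal knowledge bot in Slack.
If the message needs a lookup in company documentation, answer {{"needs_agent": true}}.
Otherwise answer {{"needs_agent": false, "reply": "<short friendly reply>"}}.
Return JSON only.

Message: {question}"""


def route(question: str, complete: Callable[[str], str]) -> tuple[bool, str]:
    """Decide whether to start the agent. `complete` sends a prompt to an LLM and returns text."""
    raw = complete(ROUTER_PROMPT.format(question=question))
    try:
        decision = json.loads(raw)
        # Only an explicit false skips the agent; anything else takes the safe path.
        needs_agent = decision.get("needs_agent", True) is not False
        return needs_agent, "" if needs_agent else str(decision.get("reply", ""))
    except (json.JSONDecodeError, AttributeError):
        return True, ""  # unparseable or non-object output: run the agent
```

If the router says no lookup is needed, its reply can be posted straight to Slack while the agent never boots.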

As for building the agent itself, this is easy, as LlamaIndex does most of the work for us. For this, you can use the FunctionAgent, passing in the different tools when setting it up.

```python
from llama_index.core.agent.workflow import FunctionAgent

# Only runs if the first LLM thinks it's necessary.
# The get_*_tool() helpers, get_system_prompt(), team_name and global_llm
# are defined elsewhere in the project.
access_links_tool = get_access_links_tool()
public_docs_tool = get_public_docs_tool()
onboarding_tool = get_onboarding_information_tool()
general_info_tool = get_general_info_tool()

formatted_system_prompt = get_system_prompt(team_name)

agent = FunctionAgent(
    tools=[onboarding_tool, public_docs_tool, access_links_tool, general_info_tool],
    llm=global_llm,
    system_prompt=formatted_system_prompt,
)
```

The tools have access to different data from the vector database, and they are wrappers around the CitationQueryEngine. This engine helps cite the source nodes in the text. We can access the source nodes at the end of the agent run and attach them to the message, for example in the footer.
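How the source nodes are pulled out of the agent run depends on your tool setup, so the sketch below starts from a list of their metadata dicts. It assumes each chunk was stored with `url` and optional `title` keys (hypothetical key names) and builds a Slack Block Kit `context` block for the footer, deduplicating URLs and escaping `&`, `<`, and `>` as Slack's text formatting requires. `sources_footer` is a hypothetical helper.

```python
def _escape(text: str) -> str:
    """Escape the three characters Slack treats as control characters in message text."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def sources_footer(source_metadata: list[dict], max_links: int = 5) -> dict:
    """Build a Slack Block Kit context block listing unique cited sources."""
    seen: set[str] = set()
    links: list[str] = []
    for meta in source_metadata:
        url = meta.get("url")
        if not url or url in seen:  # skip chunks without a URL and repeated pages
            continue
        seen.add(url)
        title = _escape(meta.get("title") or url)
        links.append(f"<{url}|{title}>")  # Slack's link syntax: <url|label>
        if len(links) == max_links:
            break
    text = "Sources: " + ", ".join(links) if links else "No sources found"
    return {"type": "context", "elements": [{"type": "mrkdwn", "text": text}]}
```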

To make sure the user experience is good, you can tap into the event stream to send updates back to Slack.

```python
from llama_index.core.agent.workflow import ToolCall, ToolCallResult

# Runs inside an async function; format_tool_name, post_thinking,
# build_slack_blocks and post_to_slack are project helpers.
handler = agent.run(user_msg=full_msg, ctx=ctx, memory=memory)

# Surface each tool call in Slack while the agent works
async for event in handler.stream_events():
    if isinstance(event, ToolCall):
        display_tool_name = format_tool_name(event.tool_name)
        post_thinking(f"✅ Checking {display_tool_name}")
    if isinstance(event, ToolCallResult):
        post_thinking("✅ Done checking...")

final_output = await handler
final_text = str(final_output)  # the agent's final answer as text

blocks = build_slack_blocks(final_text, mention)
post_to_slack(
    channel_id=channel_id,
    blocks=blocks,
    timestamp=initial_message_ts,
    client=client,
)
```

Make sure to format the messages and Slack blocks well, and refine the agent’s system prompt so it formats the messages correctly based on the information the tools return.
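The `build_slack_blocks` helper isn't shown, so here is a hypothetical version of that formatting step. LLMs tend to answer in standard Markdown, while Slack's `mrkdwn` uses `*bold*` and `<url|text>` links, and a section block's text is capped at 3,000 characters. This sketch converts the common cases (links, bold, headings) and splits long answers on paragraph boundaries; it ignores italics, lists, and code, and truncates any single paragraph longer than the limit.

```python
import re

SECTION_TEXT_LIMIT = 3000  # max characters in a section block's text object


def markdown_to_mrkdwn(md: str) -> str:
    """Convert common Markdown from an LLM into Slack mrkdwn (links, bold, headings)."""
    text = re.sub(r"\[([^\]]+)\]\((https?://[^)\s]+)\)", r"<\2|\1>", md)  # [t](url) -> <url|t>
    text = re.sub(r"\*\*(.+?)\*\*", r"*\1*", text)                         # **b** -> *b*
    text = re.sub(r"^#{1,6}[ \t]+(.+)$", r"*\1*", text, flags=re.MULTILINE)  # # H -> *H*
    return text


def to_section_blocks(md: str) -> list[dict]:
    """Pack paragraphs into section blocks that stay under Slack's text limit."""
    chunks: list[str] = []
    buf = ""
    for para in markdown_to_mrkdwn(md).split("\n\n"):
        candidate = f"{buf}\n\n{para}" if buf else para
        if len(candidate) <= SECTION_TEXT_LIMIT:
            buf = candidate
            continue
        if buf:
            chunks.append(buf)
        buf = para[:SECTION_TEXT_LIMIT]  # an oversized single paragraph is truncated here
    if buf:
        chunks.append(buf)
    return [{"type": "section", "text": {"type": "mrkdwn", "text": c}} for c in chunks]
```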

The architecture should be easy enough to understand, but there are still some retrieval techniques we should dig into.

Techniques you can try

A lot of people will emphasize certain techniques when building RAG systems, and they’re partially right. You should use hybrid search along with some kind of re-ranking.

The first I’ll mention is hybrid search when we perform retrieval.

I mentioned that we use semantic similarity to fetch chunks of data in the various tools, but you also need to account for cases where exact keyword search is required.

Just imagine a user asking for a specific certificate name, like CAT-00568. In that case, the system needs to find exact matches just as much as fuzzy ones.

With hybrid search, supported by both Qdrant and LlamaIndex, we use both dense and sparse vectors.

```python
from llama_index.vector_stores.qdrant import QdrantVectorStore

# When setting up the vector store (both for embedding and fetching).
# client / async_client are QdrantClient / AsyncQdrantClient instances.
vector_store = QdrantVectorStore(
    client=client,
    aclient=async_client,
    collection_name="knowledge_bases",
    enable_hybrid=True,
    fastembed_sparse_model="Qdrant/bm25",  # sparse BM25-style vectors for exact keywords
)
```

Sparse is perfect for exact keywords but blind to synonyms, while dense is great for “fuzzy” matches (“benefits policy” matches “employee perks”) but can miss literal strings like CAT-00568.
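With hybrid search, the sparse and dense result lists have to be merged into one ranking. LlamaIndex and Qdrant handle that fusion for you, so the sketch below only illustrates the idea using reciprocal rank fusion (RRF), a common rank-based method that may differ from what your stack uses by default. The chunk IDs are made up; note how the exact-match chunk for CAT-00568 still makes the top results even though dense search missed it.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60,
                           top_n: int = 5) -> list[str]:
    """Merge ranked lists of chunk IDs: each list adds 1 / (k + rank) to an ID's score."""
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, chunk_id in enumerate(results, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # IDs ranked well in several lists float to the top.
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


sparse_hits = ["cert-CAT-00568", "cert-list", "faq"]   # keyword (sparse) ranking
dense_hits = ["benefits-policy", "cert-list", "perks"]  # semantic (dense) ranking
print(reciprocal_rank_fusion([sparse_hits, dense_hits], top_n=3))
```

Because RRF only uses ranks, it sidesteps the fact that sparse and dense scores live on different scales.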

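Once the results are fetched, it’s useful to apply deduplication and re-ranking to filter out irrelevant chunks before sending them to the LLM for citation and synthesis.

```python
from llama_index.core.postprocessor import LLMRerank, SimilarityPostprocessor
from llama_index.core.query_engine import CitationQueryEngine
from llama_index.core.schema import MetadataMode
from llama_index.llms.openai import OpenAI

# An LLM re-scores the retrieved nodes and keeps the best 5
reranker = LLMRerank(llm=OpenAI(model="gpt-3.5-turbo"), top_n=5)

# Filters out nodes whose retrieval score is below the cutoff; tune this value,
# since a high cutoff can drop most results
dedup = SimilarityPostprocessor(similarity_cutoff=0.9)

engine = CitationQueryEngine(
    retriever=retriever,  # e.g. the hybrid retriever built on the vector store above
    node_postprocessors=[dedup, reranker],
    metadata_mode=MetadataMode.ALL,
)
```

This part wouldn’t be necessary if your data were exceptionally clean, which is why it shouldn’t be your main focus. It adds overhead and another API call.

It’s also not necessary to use a large model for re-ranking, but you’ll need to do some research on your own to figure out your options.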
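One caveat on the snippet above: `SimilarityPostprocessor` drops nodes whose retrieval score falls below `similarity_cutoff`; it doesn't compare chunks with each other. If near-duplicate chunks (the same paragraph on two pages, say) are the problem, a small pairwise check like this hypothetical `drop_near_duplicates` can run before the re-ranker. It assumes each hit carries its retrieval `score` and `embedding`, and it's quadratic in the number of hits, which is fine for a top-k of a few dozen.

```python
import math


def _cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def drop_near_duplicates(chunks: list[dict], threshold: float = 0.95) -> list[dict]:
    """Keep the highest-scoring chunk from each group of near-identical chunks."""
    kept: list[dict] = []
    # Visit best-scoring chunks first so the survivor of each group is the best one.
    for chunk in sorted(chunks, key=lambda c: c["score"], reverse=True):
        if all(_cosine(chunk["embedding"], k["embedding"]) < threshold for k in kept):
            kept.append(chunk)
    return kept
```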

These techniques are easy to understand and quick to set up, so they aren’t where you’ll spend most of your time.

What you’ll actually spend time on

Most of the things you’ll spend time on aren’t very sexy: prompting, reducing latency, and chunking documents correctly.

Before you start, look into the prompt templates of various frameworks to see how they prompt the models. You’ll spend quite a bit of time making sure the system prompt is well crafted for the LLM you choose.

The second thing you’ll spend a lot of time on is making it fast. I’ve looked into internal tools from tech companies building AI knowledge agents and found they usually respond in about 8 to 13 seconds.

So, you need something in that range.

Using a serverless provider can be a problem here because of cold starts. LLM providers also introduce their own latency, which is hard to control.

That said, you can look into spinning up resources before they’re used, switching to lower-latency models, skipping frameworks to reduce overhead, and generally lowering the number of API calls per run.
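Before optimizing, it helps to know where the seconds go. A minimal sketch, assuming you can wrap each stage yourself: the hypothetical `StageTimer` records wall-clock time per named stage with `time.perf_counter`, which complements a tracing tool when a framework hides parts of the workflow. The `time.sleep` calls stand in for real router and retrieval calls.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock seconds per named stage of a request."""

    def __init__(self) -> None:
        self.timings: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:  # record the time even if the stage raises
            elapsed = time.perf_counter() - start
            self.timings[name] = self.timings.get(name, 0.0) + elapsed

    def report(self) -> str:
        """Slowest stages first, then the total."""
        rows = sorted(self.timings.items(), key=lambda kv: kv[1], reverse=True)
        lines = [f"{name:<12}{secs:7.2f}s" for name, secs in rows]
        lines.append(f"{'total':<12}{sum(self.timings.values()):7.2f}s")
        return "\n".join(lines)


timer = StageTimer()
with timer.stage("router"):
    time.sleep(0.05)  # stand-in for the first LLM call
with timer.stage("retrieval"):
    time.sleep(0.02)  # stand-in for the vector search
print(timer.report())
```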

The last thing, which takes a huge amount of work and which I’ve mentioned before, is chunking documents.

If you had exceptionally clean data with clear headers and separations, this part would be easy. But more often, you’ll be dealing with poorly structured HTML, PDFs, raw text files, Notion boards, and Confluence notes, often scattered and inconsistently formatted.

The challenge is figuring out how to programmatically ingest these documents so the system gets the full information needed to answer a question.

Just working with PDFs, for example, you’ll need to extract tables and images properly, separate sections by page numbers or layout elements, and trace each source back to the correct page.

You want enough context, but not chunks that are too large, or it will be harder to retrieve the right data later.

This kind of thing doesn’t generalize well. You can’t just push it in and expect the system to understand it; you have to think it through before you build it.

How to build it out further

At this point, it works well for what it’s supposed to do, but there are a few pieces I should cover (or people will think I’m oversimplifying). You’ll want to implement caching, a way to update the data, and long-term memory.

Caching isn’t essential, but in larger systems you can at least cache the query’s embedding to speed up retrieval, and store recent source results for follow-up questions. I don’t think LlamaIndex helps much here, but you should be able to intercept the QueryTool on your own.
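A minimal in-process version of query-embedding caching might look like this. `EmbeddingCache` is hypothetical, `embed` is whatever function calls your embedding model, and keys are normalized for case and whitespace so trivially different phrasings share an entry. On serverless platforms an in-memory cache only lives as long as a warm container, so a shared store such as Redis would be needed for anything longer-lived.

```python
from collections import OrderedDict
from typing import Callable


class EmbeddingCache:
    """Small LRU cache so repeated or follow-up queries skip the embedding API call."""

    def __init__(self, embed: Callable[[str], list[float]], max_items: int = 1024) -> None:
        self._embed = embed
        self._max_items = max_items
        self._store: "OrderedDict[str, list[float]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: str) -> str:
        # Lower-case and collapse whitespace so trivial variations share an entry.
        return " ".join(query.lower().split())

    def get(self, query: str) -> list[float]:
        key = self._key(query)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        vector = self._embed(query)
        self._store[key] = vector
        if len(self._store) > self._max_items:
            self._store.popitem(last=False)  # evict the least recently used entry
        return vector
```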

You’ll also want a way to continuously update information in the vector databases. This is the biggest headache: it’s hard to know when something has changed, so you need some kind of change-detection method along with an ID for each chunk.

Alternatively, you could use periodic re-embedding strategies where you update a chunk with different meta tags altogether (this is my preferred approach because I’m lazy).
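If you'd rather not re-embed everything on a schedule, a content hash per chunk is one simple form of change detection. The sketch below assumes stable chunk IDs (for example, source URL plus section anchor) and a stored hash from the previous sync; `content_hash` and `diff_chunks` are hypothetical. Only the `added` and `changed` IDs need new embeddings, and `removed` IDs can be deleted from the collection.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def diff_chunks(new_chunks: dict[str, str],
                stored_hashes: dict[str, str]) -> dict[str, list[str]]:
    """Compare freshly ingested chunks (chunk_id -> text) with hashes saved at the last sync."""
    new_hashes = {cid: content_hash(text) for cid, text in new_chunks.items()}
    added = sorted(cid for cid in new_hashes if cid not in stored_hashes)
    changed = sorted(cid for cid in new_hashes
                     if cid in stored_hashes and stored_hashes[cid] != new_hashes[cid])
    removed = sorted(cid for cid in stored_hashes if cid not in new_hashes)
    return {"added": added, "changed": changed, "removed": removed}
```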

The last thing I want to mention is long-term memory for the agent, so it can understand conversations you’ve had in the past. For that, I’ve implemented some state by fetching history from the Slack API. This lets the agent see around 3–6 previous messages when responding.

We don’t want to push in too much history, since the context window grows, which not only increases cost but also tends to confuse the agent.
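On the Slack side, a thread's messages can be fetched with the Web API's `conversations.replies` method (`WebClient.conversations_replies` in `slack_sdk`) and then trimmed before they reach the agent. The hypothetical `slack_history_to_chat` below works on the returned message dicts: it keeps only messages before the current one, caps their count and length, and maps bot posts to the `assistant` role. The `max_messages` and `max_chars` defaults are assumptions.

```python
def slack_history_to_chat(messages: list[dict], bot_user_id: str, current_ts: str,
                          max_messages: int = 6, max_chars: int = 2000) -> list[dict]:
    """Convert Slack thread messages into role/content pairs for the LLM."""
    # Keep earlier messages that actually contain text.
    earlier = [m for m in messages
               if m.get("text") and float(m["ts"]) < float(current_ts)]
    earlier.sort(key=lambda m: float(m["ts"]))
    chat = []
    for m in earlier[-max_messages:]:  # only the most recent few
        is_bot = bool(m.get("bot_id")) or m.get("user") == bot_user_id
        chat.append({"role": "assistant" if is_bot else "user",
                     "content": m["text"][:max_chars]})  # cap long messages
    return chat
```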

That said, there are better ways to handle long-term memory using external tools. I’m keen to write more on that in the future.

Learnings and such

After doing this for a while now, I have a few notes to share about working with frameworks and keeping it simple (advice I personally don’t always follow).

You learn a lot from using a framework, especially how to prompt well and how to structure the code. But at some point, working around the framework adds overhead.

For instance, in this system, I’m bypassing the framework a bit by adding an initial API call that decides whether to move on to the agent and responds to the user quickly.

If I had built this without a framework, I think I could have handled that kind of logic better, with the first model deciding directly which tool to call.

I haven’t tried this, but I’m assuming it would be cleaner.

Also, LlamaIndex optimizes the user query before retrieval, which it should.

But sometimes it reduces the query too much, and I need to go in and fix it. The citation synthesizer doesn’t have access to the conversation history, so with an overly simplified query, it doesn’t always answer well.

With a framework, it’s also hard to trace where latency is coming from in the workflow, since you can’t always see everything, even with observability tools.

Many developers recommend using frameworks for quick prototyping or bootstrapping, then rewriting the core logic with direct calls in production.

That’s not because frameworks aren’t useful, but because at some point it’s better to write something you fully understand that only does what you need.

The general recommendation is to keep things as simple as possible and minimize LLM calls (which I’m not even fully doing myself here).

But if all you need is RAG and not an agent, stick with that.

You can create a simple LLM call that sets the right parameters in the vector DB. From the user’s point of view, it’ll still look like the system is “looking into the database” and returning relevant information.

If you’re going down the same path, I hope this was useful.

There’s a bit more to it, though. You’ll want to implement some kind of evaluation, guardrails, and monitoring (I’ve used Phoenix here).
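As a starting point for evaluation, even a handful of labelled question and expected-chunk pairs lets you track retrieval quality as you change chunking or prompts. This framework-free sketch computes hit rate@k and mean reciprocal rank (MRR); `evaluate_retrieval` is hypothetical, `retrieve` is any function that returns ranked chunk IDs, and it is not Phoenix's API.

```python
from typing import Callable


def evaluate_retrieval(cases: list[tuple[str, str]], retrieve: Callable[[str], list[str]],
                       k: int = 5) -> dict[str, float]:
    """Hit rate@k and mean reciprocal rank for (question, expected_chunk_id) pairs."""
    hits, reciprocal_ranks = 0, []
    for question, expected_id in cases:
        ranked = retrieve(question)[:k]
        if expected_id in ranked:
            hits += 1
            reciprocal_ranks.append(1.0 / (ranked.index(expected_id) + 1))
        else:
            reciprocal_ranks.append(0.0)  # a miss contributes nothing to MRR
    n = len(cases) or 1  # avoid dividing by zero on an empty test set
    return {"hit_rate": hits / n, "mrr": sum(reciprocal_ranks) / n}
```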

Once it’s done, though, the result will look like this:

If you want to follow my writing, you can find me here, on my website, or on LinkedIn.

I’ll try to dive deeper into agentic memory, evals, and prompting over the summer.

❤