Building and operating production-grade agentic AI applications requires more than just great foundation models (FMs). AI teams must manage complex workflows, infrastructure, and the full AI lifecycle, from prototyping to production.

Yet fragmented tooling and rigid infrastructure force teams to spend more time managing complexity than delivering innovation.

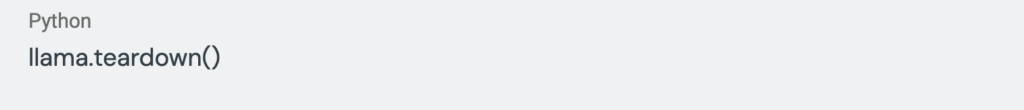

With the acquisition of Agnostiq and its open-source distributed computing platform, Covalent, DataRobot accelerates agentic AI development and deployment by unifying AI-driven decision-making, governance, lifecycle management, and compute orchestration, enabling AI developers to focus on application logic instead of infrastructure management.

In this blog, we’ll explore how these expanded capabilities help AI practitioners build and deploy agentic AI applications in production faster and more seamlessly.

How DataRobot empowers agentic AI

- Business-process-specific AI-driven workflows. Mechanisms to translate business use cases into business-context-aware agentic AI workflows and enable multi-agent frameworks to dynamically decide which functions, agents, or tools to call.

- The broadest suite of AI tools and models. Build, compare, and deploy the best agentic AI workflows.

- Best-in-class governance and monitoring. Governance (with AI registry) and monitoring for AI models, applications, and autonomous agents.

How Agnostiq enhances the stack

- Heterogeneous compute execution. Agents run where data and applications reside, ensuring compatibility across diverse environments instead of being confined to a single location.

- Optimized compute flexibility. Customers can leverage all available compute options (on-prem, accelerated clouds, and hyperscalers) to optimize for availability, latency, and cost.

- Orchestrator of orchestrators. Works seamlessly with popular frameworks like Run:ai, Kubernetes, and SLURM to unify workload execution across infrastructures.

The hidden complexity of building and managing production-grade agentic AI applications

Today, many AI teams can develop simple prototypes and demos, but getting agentic AI applications into production is a far greater challenge. Two hurdles stand in the way.

1. Building the application

Developing a production-grade agentic AI application requires more than just writing code. Teams must:

- Translate business needs into workflows.

- Experiment with different strategies using a combination of LLMs, embedding models, Retrieval Augmented Generation (RAG), fine-tuning techniques, guardrails, and prompting methods.

- Ensure solutions meet strict quality, latency, and cost objectives for specific business use cases.

- Navigate infrastructure constraints by custom-coding workflows to run throughout cloud, on-prem, and hybrid environments.

This demands not only a broad set of generative AI tools and models that work together seamlessly with enterprise systems but also infrastructure flexibility to avoid vendor lock-in and bottlenecks.

2. Deploying and operating at scale

Production AI applications require:

- Provisioning and managing GPUs and other infrastructure.

- Monitoring performance, ensuring reliability, and adjusting models dynamically.

- Enforcing governance, access controls, and compliance reporting.

Even with existing solutions, it can take months to move an application from development to production.

Existing AI solutions fall short

Most teams rely on one of two strategies, each with trade-offs:

- Custom “build your own” (BYO) AI stacks: Offer more control but require significant manual effort to integrate tools, configure infrastructure, and manage systems, making them resource-intensive and unsustainable at scale.

- Hyperscaler AI platforms: Offer an ensemble of tools for different parts of the AI lifecycle, but these tools aren’t inherently designed to work together. AI teams must integrate, configure, and manage multiple services manually, adding complexity and reducing flexibility. In addition, these platforms tend to lack governance, observability, and usability while locking teams into proprietary ecosystems with limited model and tool flexibility.

A faster, smarter way to build and deploy agentic AI applications

AI teams need a seamless way to build, deploy, and manage agentic AI applications without infrastructure complexity. With DataRobot’s expanded capabilities, they can streamline model experimentation and deployment, leveraging built-in tools to support real-world business needs.

Key benefits for AI teams

- Turnkey, use-case-specific AI apps. Customizable AI apps enable fast deployment of agentic AI applications, allowing teams to tailor workflows to fit specific business needs.

- Iterate rapidly with the broadest suite of AI tools. Experiment with custom and open-source generative AI models. Use fully managed RAG, Nvidia NeMo guardrails, and built-in evaluation tools to refine agentic AI workflows.

- Optimize AI workflows with built-in evaluation. Select the best agentic AI approach for your use case with LLM-as-a-Judge, human-in-the-loop evaluation, and operational monitoring (latency, token usage, performance metrics).

- Deploy and scale with adaptive infrastructure. Set criteria like cost, latency, or availability and let the system allocate workloads across on-prem and cloud environments. Scale on-premises and expand to the cloud as demand grows without manual reconfiguration.

- Unified observability and compliance. Monitor all models, including third-party ones, from a single pane of glass, track AI assets in the AI registry, and automate compliance with audit-ready reporting.

With these capabilities, AI teams no longer have to choose between speed and flexibility. They can build, deploy, and scale agentic AI applications with less friction and greater control.

Let’s walk through an example of how these capabilities come together to enable faster, more efficient agentic AI development.

Orchestrating multi-agent AI workflows at scale

Sophisticated multi-agent workflows are pushing the boundaries of AI capability. While several open-source and proprietary frameworks exist for building multi-agent systems, one key challenge remains overlooked: orchestrating the heterogeneous compute, governance, and operational requirements of each agent.

Each member of a multi-agent workflow may require a different backing LLM: some fine-tuned on domain-specific data, others multi-modal, and some vastly different in size. For example:

- A report consolidation agent might only need Llama 3.3 8B, requiring a single Nvidia A100 GPU.

- A primary analyst agent might need Llama 3.3 70B or 405B, demanding multiple A100 or even H100 GPUs.

Provisioning, configuring environments, monitoring, and managing communication across multiple agents with varying compute requirements is already complex. In addition, operational and governance constraints can determine where certain jobs must run, for instance when data is required to reside in certain data centers or countries.
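To make the placement problem concrete, here is a minimal sketch in plain Python of matching agents to compute pools under both hardware and data-residency constraints. It is not Covalent's API: the `ComputePool` and `AgentRequirement` classes, the pool names, the regions, and the GPU counts are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ComputePool:
    name: str
    region: str
    gpu_type: Optional[str]  # None means CPU-only
    num_gpus: int


@dataclass(frozen=True)
class AgentRequirement:
    agent: str
    model: str
    gpu_type: Optional[str]
    num_gpus: int
    allowed_regions: Optional[frozenset] = None  # None means no residency rule


def eligible_pools(req, pools):
    """Return the pools that satisfy hardware and residency constraints."""
    matches = []
    for pool in pools:
        # Governance: skip pools outside the regions the data may live in.
        if req.allowed_regions is not None and pool.region not in req.allowed_regions:
            continue
        # Hardware: GPU type must match and enough devices must exist.
        if req.num_gpus > 0:
            if pool.gpu_type != req.gpu_type or pool.num_gpus < req.num_gpus:
                continue
        matches.append(pool)
    return matches


# Hypothetical inventory and requirements, for illustration only.
pools = [
    ComputePool("onprem-eu", "eu", "A100", 1),
    ComputePool("cloud-us", "us", "H100", 8),
    ComputePool("cloud-eu", "eu", "A100", 8),
]
report_agent = AgentRequirement(
    "report-consolidation", "Llama 3.3 8B", "A100", 1, frozenset({"eu"})
)
analyst_agent = AgentRequirement("primary-analyst", "Llama 3.3 70B", "A100", 4)

print([p.name for p in eligible_pools(report_agent, pools)])   # ['onprem-eu', 'cloud-eu']
print([p.name for p in eligible_pools(analyst_agent, pools)])  # ['cloud-eu']
```

Even in this toy form, the residency rule and the GPU requirement interact: the EU-only agent cannot use the US pool regardless of how much hardware it has.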

Here’s how it works in action.

Use case: A multi-agent stock investment strategy analyzer

Financial analysts need real-time insights to make informed investment decisions, but manually analyzing vast amounts of financial data, news, and market signals is slow and inefficient.

A multi-agent AI system can automate this process, providing faster, data-driven recommendations.

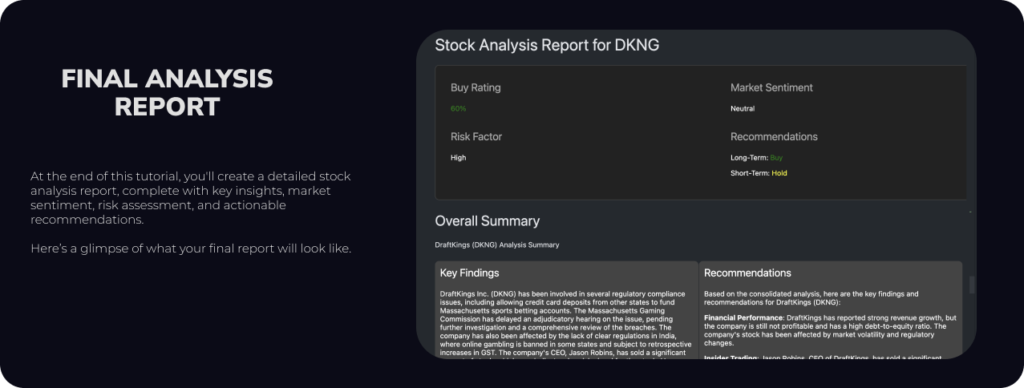

In this example, we build a Stock Investment Strategy Analyzer, a multi-agent workflow that:

- Generates a structured investment report with data-driven insights and a buy rating.

- Tracks market trends by gathering and analyzing real-time financial news.

- Evaluates financial performance, competitive landscape, and risk factors using dynamic agents.

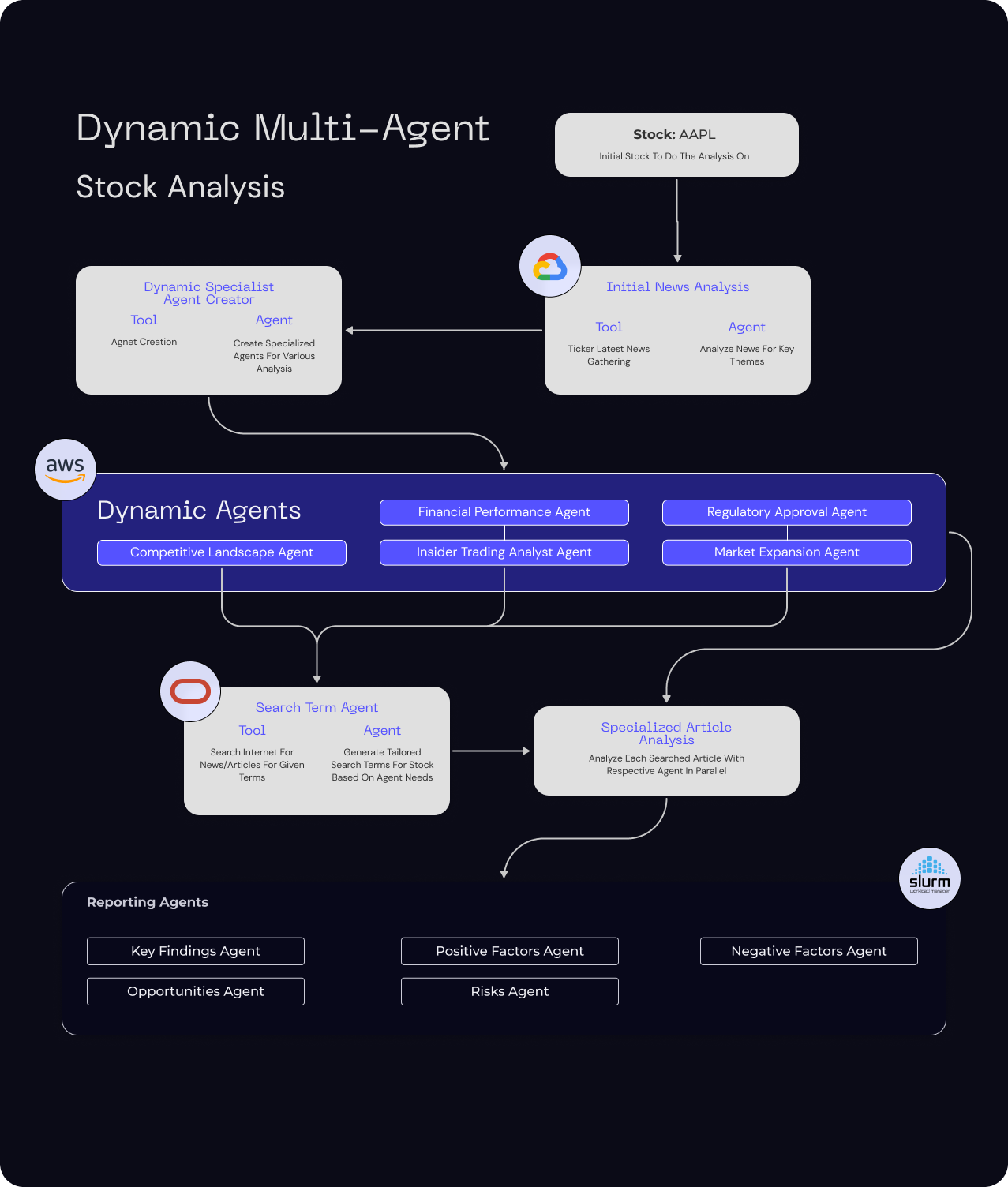

How dynamic agent creation works

Unlike static multi-agent workflows, this system creates agents on demand based on real-time market data. The primary financial analyst agent dynamically generates a cohort of specialized agents, each with a unique role.

Workflow breakdown

- The primary financial analyst agent gathers and processes initial news reports on a stock of interest.

- It then generates specialized agents, assigning them roles based on real-time data insights.

- Specialized agents analyze different factors, including:

– Financial performance (balance sheets, earnings reports)

– Competitive landscape (industry positioning, market threats)

– External market signals (web searches, news sentiment analysis)

- A set of reporting agents compiles insights into a structured investment report with a buy/sell recommendation.

This dynamic agent creation allows the system to adapt in real time, scaling resources efficiently while ensuring specialized agents handle relevant tasks.
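The workflow breakdown above can be sketched in a few lines of standard-library Python. This is an illustrative skeleton, not the implementation behind the analyzer: the keyword-based `plan_roles` function stands in for the primary analyst's LLM-driven planning, `make_agent` returns canned text instead of calling a model, and the ticker and headlines are invented.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str
    run: Callable[[str], str]


def make_agent(role):
    """Create a stand-in agent; a real one would call an LLM endpoint."""
    def run(ticker):
        return f"{role} analysis for {ticker}"
    return Agent(role=role, run=run)


def plan_roles(news_items):
    """Primary analyst step: pick roles based on what the news mentions."""
    keyword_to_role = {
        "earnings": "financial performance",
        "competitor": "competitive landscape",
        "sentiment": "external market signals",
    }
    roles = []
    for keyword, role in keyword_to_role.items():
        if any(keyword in item.lower() for item in news_items):
            roles.append(role)
    return roles


def analyze(ticker, news_items):
    # Agents are created on demand, so the cohort varies with the input.
    agents = [make_agent(role) for role in plan_roles(news_items)]
    # Specialized agents are independent, so they can run concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        findings = list(pool.map(lambda a: a.run(ticker), agents))
    # Reporting step: compile findings into one structured result.
    return {"ticker": ticker, "findings": findings}


report = analyze("ACME", ["Earnings beat expectations", "New competitor enters market"])
print(report["findings"])
# ['financial performance analysis for ACME', 'competitive landscape analysis for ACME']
```

The point of the sketch is the shape of the control flow: the set of agents is computed from the data rather than fixed in advance, which is what makes per-agent compute assignment a runtime concern.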

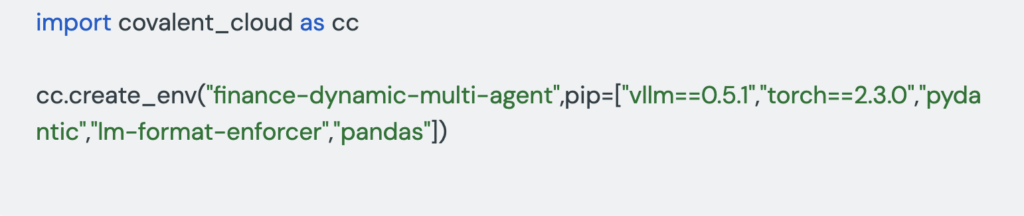

Infrastructure orchestration with Covalent

The combined power of DataRobot and Agnostiq’s Covalent platform eliminates the need to manually build and deploy Docker images. Instead, AI practitioners can simply define their package dependencies, and Covalent handles the rest.

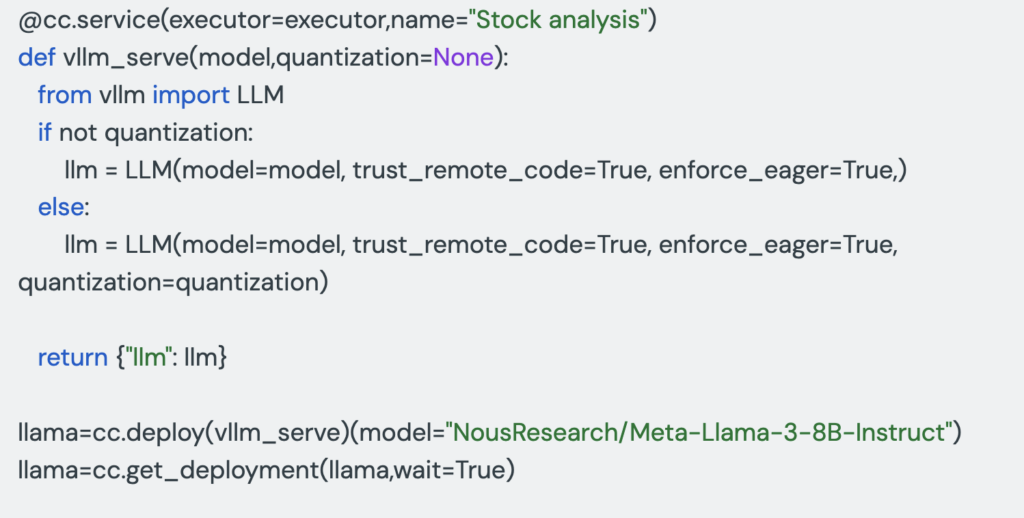

Step 1: Define the compute environment

- No manual setup required. Simply list dependencies and Covalent provisions the required environment.

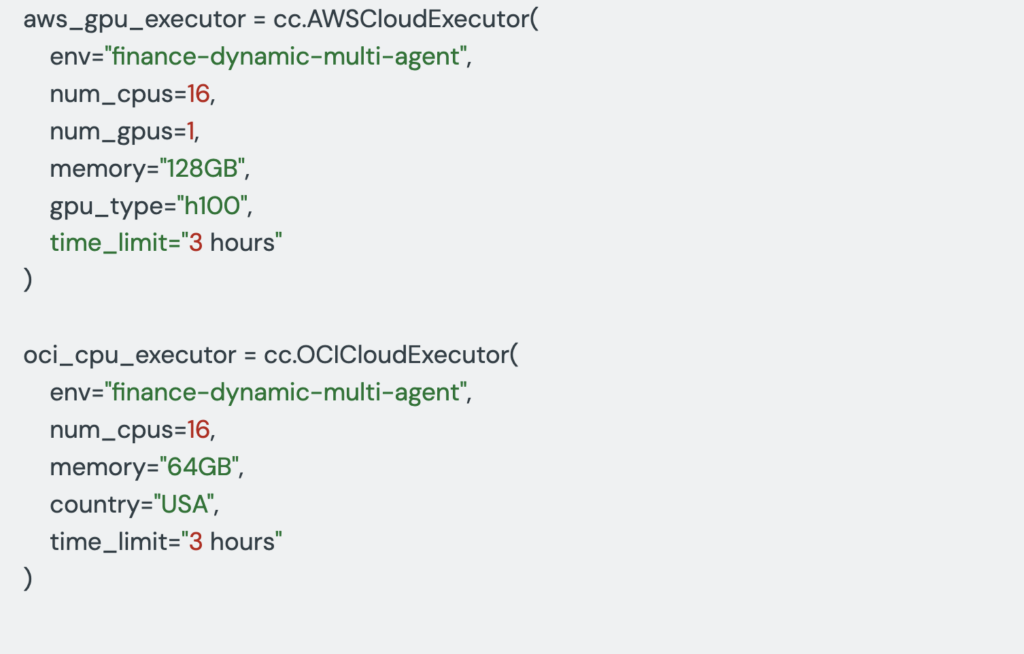

Step 2: Provision compute resources in a software-defined manner

Each agent requires different hardware, so we define compute resources accordingly.

Covalent automates compute provisioning, allowing AI developers to define compute needs in Python while it handles resource allocation across multiple cloud and on-prem environments.

Acting as an “orchestrator of orchestrators,” it bridges the gap between agentic logic and scalable infrastructure, dynamically assigning workloads to the best available compute resources. This removes the burden of manual infrastructure management, making multi-agent applications easier to scale and deploy.

Combined with DataRobot’s governance, monitoring, and observability, it gives teams the flexibility to manage agentic AI more efficiently.

- Flexibility: Agents using large models (e.g., Llama 3.3 70B) can be assigned to multi-GPU A100/H100 instances, while lightweight agents run on CPU-based infrastructure.

- Automatic scaling: Covalent provisions resources across clouds and on-prem as needed, eliminating manual provisioning.
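As a rough illustration of what “assigning workloads to the best available compute resources” can mean, the sketch below picks a pool by a single optimization criterion. It is a simplified stand-in, not Covalent's scheduler: the `Pool` class, the `pick_pool` function, and every price and latency figure are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    num_gpus: int
    cost_per_hour: float
    latency_ms: float
    available: bool


def pick_pool(pools, min_gpus, optimize_for="cost"):
    """Pick the available pool with enough GPUs that minimizes the criterion."""
    keys = {
        "cost": lambda p: p.cost_per_hour,
        "latency": lambda p: p.latency_ms,
    }
    if optimize_for not in keys:
        raise ValueError(f"unknown criterion: {optimize_for}")
    candidates = [p for p in pools if p.available and p.num_gpus >= min_gpus]
    if not candidates:
        raise LookupError("no pool satisfies the request")
    return min(candidates, key=keys[optimize_for])


# Hypothetical inventory; the numbers are made up for the example.
pools = [
    Pool("onprem-gpu", 8, 10.0, 40.0, True),
    Pool("cloud-a", 8, 30.0, 15.0, True),
    Pool("cloud-b", 8, 5.0, 10.0, False),  # cheapest, but currently unavailable
    Pool("cpu-only", 0, 1.0, 20.0, True),
]

print(pick_pool(pools, min_gpus=4, optimize_for="cost").name)     # onprem-gpu
print(pick_pool(pools, min_gpus=4, optimize_for="latency").name)  # cloud-a
print(pick_pool(pools, min_gpus=0, optimize_for="cost").name)     # cpu-only
```

A lightweight agent with `min_gpus=0` lands on CPU infrastructure, while a large-model agent is routed to a GPU pool, mirroring the flexibility described above.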

Once compute resources are provisioned, agents can seamlessly interact through a deployed inference endpoint for real-time decision-making.

Step 3: Deploy an AI inference endpoint

For real-time agent interactions, Covalent makes deploying inference endpoints seamless. In this example, the inference service for our primary financial analyst agent uses Llama 3.3 8B, and the setup has two useful properties:

- A persistent inference service enables multi-agent interactions in real time.

- It supports lightweight and large-scale models. Simply adjust the execution environment as needed.

Want to run a 405B parameter model that requires 8x H100s? Just define another executor and deploy it in the same workflow.

Step 4: Tearing down infrastructure

Once the workflow completes, shutting down resources is simple.

- No wasted compute. Resources deallocate immediately after teardown.

- Simplified management. No manual cleanup required.
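One common way to guarantee that nothing is left running is to tie teardown to scope, so resources are released even if the workflow fails. The following is a generic Python pattern, not Covalent's teardown API: `provisioned`, `active_resources`, and the resource name are hypothetical stand-ins for real provisioning and deallocation calls.

```python
from contextlib import contextmanager

# Stand-in registry of live resources; a real system would query the platform.
active_resources = set()


@contextmanager
def provisioned(name):
    """Provision a named resource and always release it on exit."""
    active_resources.add(name)  # stand-in for real provisioning
    try:
        yield name
    finally:
        active_resources.discard(name)  # stand-in for real teardown


def run_workflow():
    with provisioned("analyst-endpoint") as endpoint:
        # The resource exists only inside this block.
        return f"report generated via {endpoint}"


result = run_workflow()
print(result)                 # report generated via analyst-endpoint
print(len(active_resources))  # 0
```

Because the release happens in a `finally` clause, an exception inside the workflow still deallocates the resource, which is the “no wasted compute” property in miniature.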

Scaling AI without automation

Before jumping into the implementation, consider what it would take to build and deploy this application manually. Managing dynamic, semi-autonomous agents at scale requires constant oversight: teams must balance capabilities with guardrails, prevent unintended agent proliferation, and ensure a clear chain of responsibility.

Without automation, this is a massive infrastructure and operational burden. Covalent removes these challenges, enabling teams to orchestrate distributed applications across any environment, without vendor lock-in or specialized infra teams.

Give it a try.

Explore and customize the full working implementation in this detailed documentation.

A look inside Covalent’s orchestration engine

Compute infra abstraction

Covalent lets AI practitioners define compute requirements in Python, without manual containerization, provisioning, or scheduling. Instead of dealing with raw infrastructure, users specify abstracted compute concepts similar to serverless frameworks.

- Run AI pipelines anywhere, from an on-prem GPU cluster to AWS P5.24xl instances, with minimal code changes.

- Developers can access cloud, on-prem, and hybrid compute resources through a single Python interface.

Cloud-agnostic orchestration: Scaling across distributed environments

Covalent operates as an orchestrator-of-orchestrators layer above traditional orchestrators like Kubernetes, Run:ai, and SLURM, enabling cross-cloud and multi-data center orchestration.

- Abstracts clusters, not just VMs. The first generation of orchestrators abstracted VMs into clusters. Covalent takes it further by abstracting clusters themselves.

- Eliminates DevOps overhead. AI teams get cloud flexibility without vendor lock-in, while Covalent automates provisioning and scaling.

Workflow orchestration for agentic AI pipelines

Covalent includes native workflow orchestration built for high-throughput, parallel AI workloads.

- Optimizes execution across hybrid compute environments. Ensures seamless coordination between different models, agents, and compute instances.

- Orchestrates complex AI workflows. Ideal for multi-step, multi-model agentic AI applications.
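To show what orchestrating a multi-step, parallel workflow involves at its core, here is a small sketch that groups dependent steps into waves using Python's standard `graphlib` module (Python 3.9+). It does not reflect Covalent's internals; the step names are hypothetical and loosely follow the stock analyzer example.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical names).
workflow = {
    "gather_news": set(),
    "financial_performance": {"gather_news"},
    "competitive_landscape": {"gather_news"},
    "market_signals": {"gather_news"},
    "compile_report": {
        "financial_performance",
        "competitive_landscape",
        "market_signals",
    },
}


def execution_waves(graph):
    """Group steps into waves; steps in one wave have no mutual dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves


for index, wave in enumerate(execution_waves(workflow), start=1):
    print(index, wave)
# 1 ['gather_news']
# 2 ['competitive_landscape', 'financial_performance', 'market_signals']
# 3 ['compile_report']
```

The three analysis steps fall into the same wave, so an orchestrator is free to dispatch them in parallel to different compute instances before the report step runs.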

Designed for evolving AI workloads

Originally built for quantum and HPC applications, Covalent now unifies diverse computing paradigms with a modular architecture and plug-in ecosystem.

- Extensible to new HPC technologies and hardware. Ensures applications remain future-proof as new AI hardware enters the market.

By integrating Covalent’s pluggable compute orchestrator, DataRobot extends its capabilities as an infrastructure-agnostic AI platform, enabling the deployment of AI applications that require large-scale, distributed GPU workloads while remaining adaptable to emerging HPC technologies and hardware vendors.

Bringing agentic AI to production without the complexity

Agentic AI applications introduce new levels of complexity, from managing multi-agent workflows to orchestrating diverse compute environments. With Covalent now part of DataRobot, AI teams can focus on building, not infrastructure.

Whether deploying AI applications across cloud, on-prem, or hybrid environments, this integration provides the flexibility, scalability, and control needed to move from experimentation to production seamlessly.

Big things are ahead for agentic AI. This is just the beginning of simplifying orchestration, governance, and scalability. Stay tuned for new capabilities coming soon and sign up for a free trial to explore more.

About the authors

Dr. Ramyanshu (Romi) Datta is the Vice President of Product for AI Platform at DataRobot, responsible for capabilities that enable orchestration and lifecycle management of AI Agents and Applications. Previously he was at AWS, leading product management for AWS’s AI Platforms: Amazon Bedrock Core Systems and Generative AI on Amazon SageMaker. He was also GM for AWS’s Human-in-the-Loop AI services. Prior to AWS, Dr. Datta held engineering and product roles at IBM and Nvidia. He received his M.S. and Ph.D. degrees in Computer Engineering from the University of Texas at Austin, and his MBA from the University of Chicago Booth School of Business. He is a co-inventor of 25+ patents on subjects ranging from Artificial Intelligence, Cloud Computing & Storage to High-Performance Semiconductor Design and Testing.

Dr. Debadeepta Dey is a Distinguished Researcher at DataRobot, where he leads dual-purpose strategic research initiatives. These initiatives focus on advancing the fundamental state of the art in Deep Learning and Generative AI, while also solving pervasive problems faced by DataRobot’s customers, with the goal of enabling them to derive value from AI. He completed his PhD in AI and Robotics at The Robotics Institute, Carnegie Mellon University, in 2015. From 2015 to 2024, he was a researcher at Microsoft Research. His primary research interests include Reinforcement Learning, AutoML, Neural Architecture Search, and high-dimensional planning. He regularly serves as Area Chair at ICML, NeurIPS, and ICLR, and has published over 30 papers in top-tier AI and Robotics journals and conferences. His work has been recognized with a Best Paper of the Year Shortlist nomination at the International Journal of Robotics Research.

Nivetha Purusothaman is a Distinguished Engineer at DataRobot, where she leads multiple engineering and product initiatives to support strategic partnerships. Prior to DataRobot, she spent four years in the blockchain industry leading engineering teams and supporting blockchain initiatives such as data availability and restaking. She was also one of the lead engineers with AWS Relational Database Service and AWS Elastic MapReduce.

Will is a Principal Engineer at DataRobot, specializing in serverless and high-performance computing infrastructure. He previously worked as the Head of High Performance Computing at Agnostiq and as a Postdoctoral Fellow at Perimeter Institute, where he developed novel GPU algorithms in computational geometry and quantum gravity. Will holds a Ph.D. in theoretical physics from Northeastern University.