Speed, scale, and collaboration are essential for AI teams, but limited structured data, compute resources, and centralized workflows often stand in the way.

Whether you’re a DataRobot customer or an AI practitioner looking for smarter ways to prepare and model large datasets, new tools like incremental learning, optical character recognition (OCR), and enhanced data preparation will eliminate roadblocks, helping you build more accurate models in less time.

Here’s what’s new in the DataRobot Workbench experience:

- Incremental learning: Efficiently model large data volumes with greater transparency and control.

- Optical character recognition (OCR): Instantly convert unstructured scanned PDFs into usable data for predictive and generative AI use cases.

- Easier collaboration: Work with your team in a unified space with shared access to data prep, generative AI development, and predictive modeling tools.

Model efficiently on large data volumes with incremental learning

Building models with large datasets often leads to surprise compute costs, inefficiencies, and runaway expenses. Incremental learning removes these obstacles, allowing you to model on large data volumes with precision and control.

Instead of processing an entire dataset at once, incremental learning runs successive iterations on your training data, using only as much data as needed to achieve optimal accuracy.

Each iteration is visualized on a graph (see Figure 1), where you can track the number of rows processed and the accuracy gained, all based on the metric you choose.

Key benefits of incremental learning:

- Only process the data that drives results

Incremental learning stops jobs automatically when diminishing returns are detected, ensuring you use just enough data to achieve optimal accuracy. In DataRobot, each iteration is tracked, so you’ll clearly see how much data yields the strongest results. You are always in control and can customize and run additional iterations to get it just right.

- Train on just the right amount of data

Incremental learning helps prevent overfitting by iterating on smaller samples, so your model learns patterns, not just the training data.

- Automate complex workflows

Ensure this data provisioning is fast and error free. Advanced code-first users can go one step further and streamline retraining by using saved weights to process only new data. This avoids the need to rerun the entire dataset from scratch, reducing errors from manual setup.
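The automatic stopping described in this list can be sketched in a few lines of Python. This is a minimal illustration, not DataRobot's implementation: `run_incremental`, `train_and_score`, `toy_score`, and `min_gain` are hypothetical names, and the rule used here (stop when the score improves by less than a threshold between iterations) is only an assumed stand-in for however diminishing returns are actually detected.

```python
from typing import Callable, List, Tuple


def run_incremental(
    train_and_score: Callable[[int], float],
    row_counts: List[int],
    min_gain: float,
) -> List[Tuple[int, float]]:
    """Train on growing samples; stop once the score gain drops below min_gain.

    train_and_score(n_rows) is a hypothetical callback that fits a model on
    the first n_rows rows and returns a score where higher is better.
    """
    history: List[Tuple[int, float]] = []
    for n_rows in row_counts:
        score = train_and_score(n_rows)
        history.append((n_rows, score))
        # Compare with the previous iteration and stop on diminishing returns.
        if len(history) > 1 and score - history[-2][1] < min_gain:
            break
    return history


def toy_score(n_rows: int) -> float:
    """Toy stand-in for real training: the score rises quickly, then flattens."""
    return 0.9 - 0.4 / (1 + n_rows / 10_000)


schedule = [10_000, 20_000, 40_000, 80_000, 160_000, 320_000, 640_000]
history = run_incremental(toy_score, schedule, min_gain=0.02)
for n_rows, score in history:
    print(f"{n_rows:>7} rows -> score {score:.4f}")
```

With this toy curve the loop ends after the 320,000-row iteration and never runs the 640,000-row one. Raising or lowering `min_gain` is the kind of customization the text describes.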

When to best leverage incremental learning

There are two key scenarios where incremental learning drives efficiency and control:

- One-time modeling jobs

You can customize early stopping on large datasets to avoid unnecessary processing, prevent overfitting, and ensure data transparency.

- Dynamic, regularly updated models

For models that react to new information, advanced code-first users can build pipelines that add new data to training sets without a full rerun.

Unlike the approach on other AI platforms, DataRobot’s incremental learning gives you control over large data jobs, making them faster, more efficient, and less costly.
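As a rough sketch of what "saved weights plus new data only" can look like, the example below continues training a tiny linear model from stored weights instead of starting over. Everything here is an assumption for illustration: `update_weights` is a hypothetical helper, the model is plain stochastic gradient descent on toy data, and the weights are kept in a JSON string where a real pipeline would use a file or model registry.

```python
import json
from typing import List, Tuple

Row = Tuple[List[float], float]  # (feature values, target)


def update_weights(weights: List[float], rows: List[Row],
                   lr: float = 0.1, epochs: int = 2000) -> List[float]:
    """Continue SGD for a linear model y = w[0] + w[1:] . x from the given weights."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in rows:
            error = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)) - y
            w[0] -= lr * error
            for i, xi in enumerate(x):
                w[i + 1] -= lr * error * xi
    return w


# Initial job: train from scratch on the first batch (toy data on y = 1 + 2x).
first_batch: List[Row] = [([0.0], 1.0), ([0.5], 2.0), ([1.0], 3.0)]
saved = json.dumps({"weights": update_weights([0.0, 0.0], first_batch)})

# Later job: reload the saved weights and train on the new rows only.
new_batch: List[Row] = [([1.5], 4.0), ([2.0], 5.0)]
restored = json.loads(saved)["weights"]
updated = update_weights(restored, new_batch)
print([round(w, 3) for w in updated])
```

The second job never touches `first_batch`, which is the point: only the new rows are processed, and the earlier training is carried forward through the saved weights.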

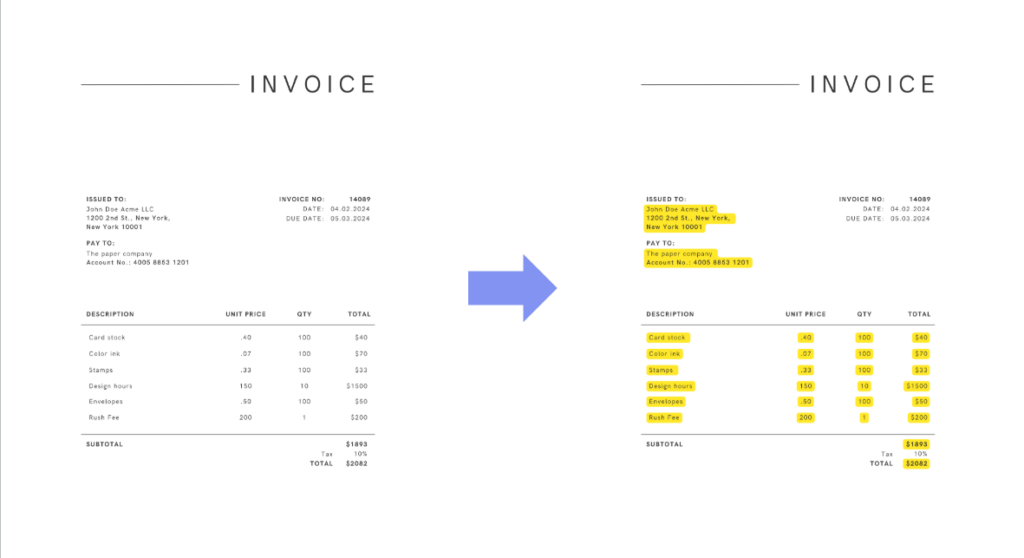

How optical character recognition (OCR) prepares unstructured data for AI

Getting access to large quantities of usable data can be a barrier to building accurate predictive models and powering retrieval-augmented generation (RAG) chatbots. This is especially true because an estimated 80-90% of company data is unstructured, which can be challenging to process. OCR removes that barrier by turning scanned PDFs into a usable, searchable format for predictive and generative AI.

How it works

OCR is a code-first capability within DataRobot. By calling the API, you can transform a ZIP file of scanned PDFs into a dataset of text-embedded PDFs. The extracted text is embedded directly into the PDF document, ready to be accessed by document AI features.
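The input to that workflow is a ZIP file of scanned PDFs, and preparing one needs only the Python standard library. The sketch below covers that packaging step alone; the DataRobot API call itself is deliberately not shown. `zip_scanned_pdfs` is a hypothetical helper, the file names are placeholders, and the flat archive layout is an assumption rather than a documented requirement.

```python
import tempfile
import zipfile
from pathlib import Path
from typing import List


def zip_scanned_pdfs(pdf_dir: Path, archive_path: Path) -> List[str]:
    """Bundle every .pdf file directly inside pdf_dir into one ZIP archive."""
    pdf_files = sorted(
        p for p in pdf_dir.iterdir() if p.is_file() and p.suffix.lower() == ".pdf"
    )
    if not pdf_files:
        raise ValueError(f"No PDF files found in {pdf_dir}")
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for pdf in pdf_files:
            # Store by file name only so the archive has a flat layout (assumed).
            archive.write(pdf, arcname=pdf.name)
    return [p.name for p in pdf_files]


# Demo with placeholder files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    scans = Path(tmp) / "scans"
    scans.mkdir()
    for name in ("invoice_001.pdf", "invoice_002.pdf", "notes.txt"):
        (scans / name).write_bytes(b"placeholder content")
    archive_path = Path(tmp) / "scans.zip"
    added = zip_scanned_pdfs(scans, archive_path)
    with zipfile.ZipFile(archive_path) as archive:
        archived_names = sorted(archive.namelist())

print(archived_names)
```

Non-PDF files such as `notes.txt` are skipped, so the archive contains only the documents meant for OCR.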

How OCR can power multimodal AI

Our new OCR functionality isn’t just for generative AI or vector databases. It also simplifies the preparation of AI-ready data for multimodal predictive models, enabling richer insights from diverse data sources.

Multimodal predictive AI data prep

Rapidly turn scanned documents into a dataset of PDFs with embedded text. This allows you to extract key information and build features for your predictive models using document AI capabilities.

For example, say you want to predict operating expenses but only have access to scanned invoices. By combining OCR, document text extraction, and an integration with Apache Airflow, you can turn these invoices into a powerful data source for your model.

Powering RAG LLMs with vector databases

Large vector databases support more accurate retrieval-augmented generation (RAG) for LLMs, especially when they are built from larger, richer datasets. OCR plays a key role by turning scanned PDFs into text-embedded PDFs, making that text usable as vectors to power more precise LLM responses.

Practical use case

Imagine building a RAG chatbot that answers complex employee questions. Employee benefits documents are often dense and difficult to search. By using OCR to prepare these documents for generative AI, you can enrich an LLM, enabling employees to get fast, accurate answers in a self-service format.
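To make the retrieval step concrete, here is a toy sketch of how extracted text becomes vectors that can be searched. It is an illustration under loud assumptions: the "embedding" is a plain word count rather than a learned embedding model, the in-memory list stands in for a vector database, and the document snippets, `embed`, `cosine`, and `top_k` are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: List[str], k: int = 1) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    query_vector = embed(query)
    scored = [(cosine(query_vector, embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda item: item[0], reverse=True)[:k]


# Hypothetical text chunks, as if extracted from OCR-processed PDFs.
chunks = [
    "Employees accrue 1.5 vacation days per month of service.",
    "Dental coverage begins on the first day of the month after hire.",
    "The 401k match is 4 percent of eligible salary.",
]
best = top_k("When does dental coverage begin?", chunks)
print(best[0][1])
```

In a RAG setup, the retrieved chunk would be passed to the LLM as context for its answer. The more documents OCR makes searchable, the more likely it is that a relevant chunk exists to retrieve.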

WorkBench migrations that increase collaboration

Collaboration can be one of the biggest blockers to fast AI delivery, especially when teams are forced to work across multiple tools and data sources. DataRobot’s NextGen WorkBench solves this by unifying key predictive and generative modeling workflows in one shared environment.

This migration means you can build both predictive and generative models using both the graphical user interface (GUI) and code-based notebooks and codespaces, all in a single workspace. It also brings powerful data preparation capabilities into the same environment, so teams can collaborate on end-to-end AI workflows without switching tools.

Accelerate data preparation where you develop models

Data preparation often takes up to 80% of a data scientist’s time. The NextGen WorkBench streamlines this process with:

- Data quality detection and automated data healing: Identify and resolve issues like missing values, outliers, and format errors automatically.

- Automated feature detection and reduction: Automatically identify key features and remove low-impact ones, reducing the need for manual feature engineering.

- Out-of-the-box visualizations of data analysis: Instantly generate interactive visualizations to explore datasets and spot trends.

Improve data quality and visualize issues instantly

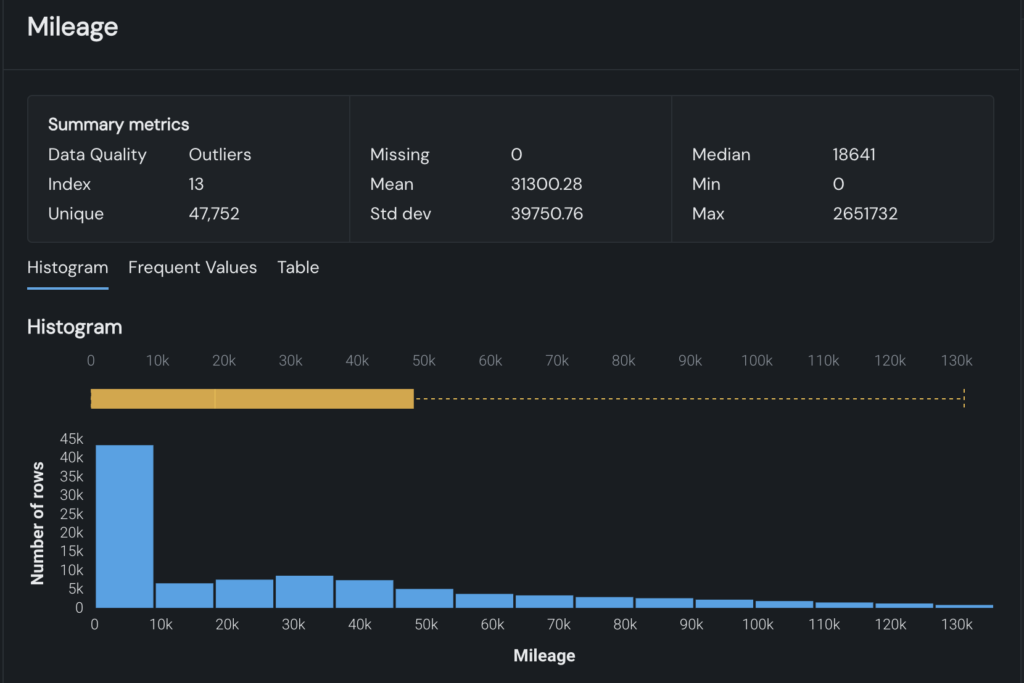

Data quality issues like missing values, outliers, and format errors can slow down AI development. The NextGen WorkBench addresses this with automated scans and visual insights that save time and reduce manual effort.

Now, when you upload a dataset, automated scans check for key data quality issues, including:

- Outliers

- Multicategorical format errors

- Inliers

- Excess zeros

- Disguised missing values

- Target leakage

- Missing images (in image datasets only)

- PII

These data quality checks are paired with out-of-the-box EDA (exploratory data analysis) visualizations. New datasets are automatically visualized in interactive graphs, giving you instant visibility into data trends and potential issues, without having to build charts yourself. Figure 3 below demonstrates how quality issues are highlighted directly within the graph.

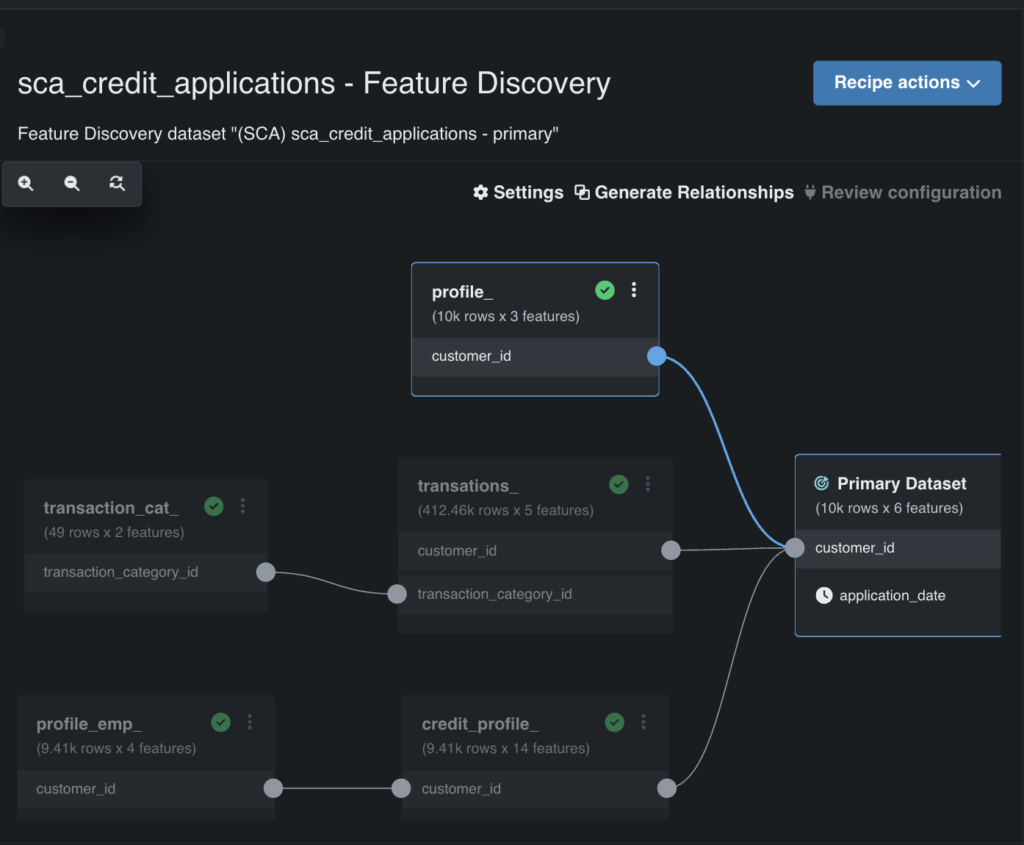
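For a sense of what a few of the checks listed above involve, the sketch below scans a single numeric column for missing values, disguised missing values, outliers, and excess zeros. It is a simplified illustration, not DataRobot's detection logic: `quality_report` is a hypothetical function, the sentinel values in `DISGUISED_MISSING` and the 50% zero threshold are assumptions, and outliers are flagged with the common 1.5 × IQR rule.

```python
import statistics
from typing import Dict, Optional, Sequence

# Hypothetical sentinel values that often stand in for "missing".
DISGUISED_MISSING = {-999, 9999}


def quality_report(values: Sequence[Optional[float]],
                   zero_share_limit: float = 0.5) -> Dict[str, object]:
    """Run a few simple data quality checks on one numeric column."""
    present = [v for v in values if v is not None]
    disguised = [v for v in present if v in DISGUISED_MISSING]
    clean = [v for v in present if v not in DISGUISED_MISSING]

    # Outliers: values more than 1.5 * IQR outside the quartiles.
    q1, _, q3 = statistics.quantiles(clean, n=4)
    spread = 1.5 * (q3 - q1)
    outliers = [v for v in clean if v < q1 - spread or v > q3 + spread]

    zero_share = sum(1 for v in clean if v == 0) / len(clean)
    return {
        "missing": len(values) - len(present),
        "disguised_missing": disguised,
        "outliers": outliers,
        "excess_zeros": zero_share > zero_share_limit,
    }


column = [12, 15, 14, 13, -999, 16, 15, 14, 250, None, 13, 15]
report = quality_report(column)
print(report)
```

On this toy column the report flags one missing value, `-999` as a disguised missing value, and `250` as an outlier. Separating sentinels before computing quartiles matters, because otherwise a value like `-999` would distort the outlier bounds.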

Automate feature detection and reduce complexity

Automated feature detection helps you simplify feature engineering, making it easier to join secondary datasets, detect key features, and remove low-impact ones.

This capability scans all your secondary datasets to find similarities, such as customer IDs (see Figure 4), and enables you to automatically join them into a training dataset. It also identifies and removes low-impact features, reducing unnecessary complexity.

You maintain full control, with the ability to review and customize which features are included or excluded.
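The join step can be pictured with a small example: find the column two datasets share, then attach the secondary dataset's columns to the primary one. This is a minimal sketch with assumptions stated up front: `shared_columns` and `left_join` are hypothetical helpers, matching is by identical column name only, and the key is assumed to be unique in the secondary dataset.

```python
from typing import Dict, List, Set

Table = List[Dict[str, object]]


def shared_columns(primary: Table, secondary: Table) -> Set[str]:
    """Column names present in both tables: the candidate join keys."""
    return set(primary[0]) & set(secondary[0])


def left_join(primary: Table, secondary: Table, key: str) -> Table:
    """Attach secondary columns to each primary row; unmatched rows get None."""
    lookup = {row[key]: row for row in secondary}  # assumes key is unique
    extra_columns = [c for c in secondary[0] if c != key]
    joined: Table = []
    for row in primary:
        match = lookup.get(row[key], {})
        merged = dict(row)
        for column in extra_columns:
            merged[column] = match.get(column)
        joined.append(merged)
    return joined


# Hypothetical primary (training) and secondary datasets.
customers: Table = [
    {"customer_id": 1, "churned": 0},
    {"customer_id": 2, "churned": 1},
    {"customer_id": 3, "churned": 0},
]
spend: Table = [
    {"customer_id": 1, "total_spend": 120.0},
    {"customer_id": 2, "total_spend": 35.5},
]

keys = shared_columns(customers, spend)
training = left_join(customers, spend, key="customer_id")
print(keys, training)
```

A left join keeps every row of the primary dataset, so customers without a match in the secondary data stay in the training set with an empty value rather than being dropped.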

Don’t let slow workflows slow you down

Data prep doesn’t have to take 80% of your time. Disconnected tools don’t have to slow your progress. And unstructured data doesn’t have to be out of reach.

With NextGen WorkBench, you have the tools to move faster, simplify workflows, and build with less manual effort. These features are already available to you; it’s just a matter of putting them to work.

If you’re ready to see what’s possible, explore the NextGen experience in a free trial.

About the author

Ezra Berger is a Senior Product Marketing Manager at DataRobot. He has over 9 years of experience building content and go-to-market strategies for technical audiences in AI, data science, and engineering. Prior to DataRobot, Ezra held similar roles at Snowflake, DoorDash, and Grid Dynamics. He holds a BA from the University of California, Los Angeles.