, you know they’re stateless. If you haven’t, think of them as having no short-term memory.

An example of this is the movie Memento, where the protagonist constantly needs to be reminded of what has happened, using post-it notes with facts to piece together what he should do next.

To converse with LLMs, we need to remind them of the conversation every time we interact.

Implementing what we call “short-term memory” or state is easy. We just grab a few previous question-answer pairs and include them in each call.
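To make this concrete, here is a minimal sketch of such a buffer. The class name `ShortTermMemory`, the `max_pairs` parameter, and the chat-style message dictionaries are my own assumptions for illustration, not any particular provider’s API.

```python
from collections import deque


class ShortTermMemory:
    """Keeps only the most recent question-answer pairs (hypothetical helper)."""

    def __init__(self, max_pairs: int = 3):
        # A deque with maxlen drops the oldest pair once the limit is reached.
        self.pairs = deque(maxlen=max_pairs)

    def add(self, question: str, answer: str) -> None:
        self.pairs.append((question, answer))

    def build_messages(self, new_question: str) -> list:
        """Return recent history followed by the new question, in chat format."""
        messages = []
        for question, answer in self.pairs:
            messages.append({"role": "user", "content": question})
            messages.append({"role": "assistant", "content": answer})
        messages.append({"role": "user", "content": new_question})
        return messages
```

You would call `build_messages` before every LLM request and `add` after every response, so each call carries the last few turns with it.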

Long-term memory, on the other hand, is an entirely different beast.

To make sure the LLM can pull up the right facts, understand previous conversations, and connect information, we need to build some fairly complex systems.

This article will walk through the problem, explore what’s needed to build an efficient system, go through the different architectural choices, and look at the open-source and cloud providers that can help us out.

Thinking through a solution

Let’s first walk through the thought process of building memory for LLMs, and what we’ll need for it to be efficient.

The first thing we need is for the LLM to be able to pull up old messages to tell us what has been said. So we can ask it, “What was the name of that restaurant you told me to go to in Stockholm?” This would be basic information extraction.

If you’re completely new to building LLM systems, your first thought may be to just dump every memory into the context window and let the LLM make sense of it.

This strategy, though, makes it hard for the LLM to figure out what’s important and what’s not, which can lead it to hallucinate answers.

Your second thought may be to store every message, along with summaries, and use hybrid search to fetch information when a query comes in.

This would be similar to how you build standard retrieval systems.

The issue with this is that once it starts scaling, you’ll run into memory bloat, outdated or contradicting facts, and a growing vector database that constantly needs pruning.

You might also need to understand when things happened, so that you can ask, “When did you tell me about this restaurant?” This means you’d need some level of temporal reasoning.

This may force you to implement better metadata with timestamps, and possibly a self-editing system that updates and summarizes inputs.

Although more complex, a self-editing system could update facts and invalidate them when needed.

If you keep thinking through the problem, you may also want the LLM to connect different facts (that is, perform multi-hop reasoning) and recognize patterns.

So you can ask it questions like, “How many concerts have I been to this year?” or “What do you think my music taste is?”, which may lead you to experiment with knowledge graphs.

Organizing the solution

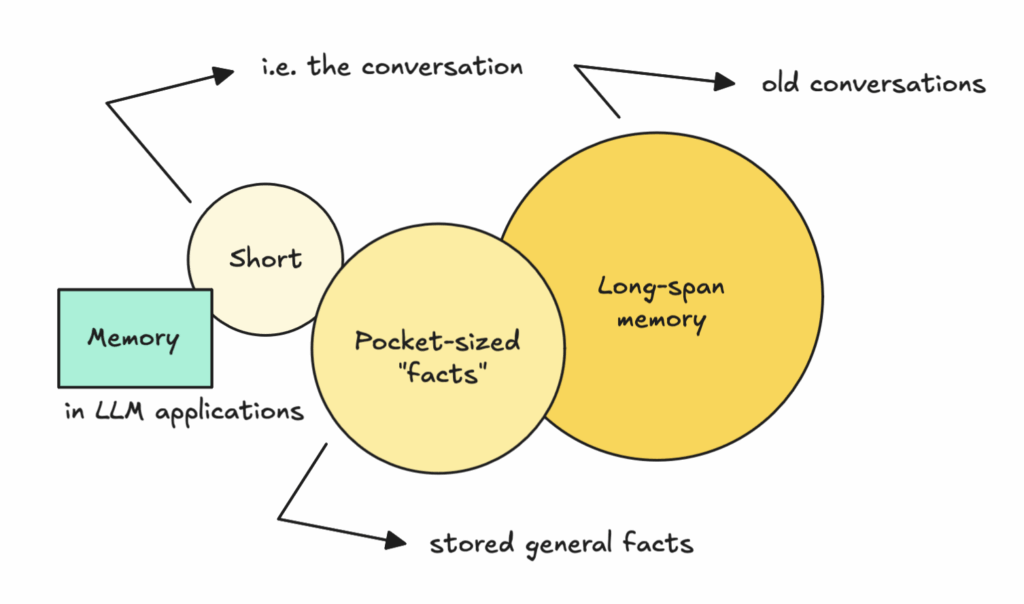

The fact that this has become such a large problem is pushing people to organize it better. I think of long-term memory as two parts: pocket-sized facts and long-span memory of previous conversations.

For the first part, pocket-sized facts, we can look at ChatGPT’s memory system as an example.

To build such a memory, they likely use a classifier to decide if a message contains a fact that should be stored.

Then they classify the fact into a predefined bucket (such as profile, preferences, or projects) and either update an existing memory if it’s similar or create a new one if it’s not.
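A rough sketch of that classify-then-upsert flow could look like the following. Everything here is an assumption for illustration: the trigger phrases, the word-overlap similarity, and the 0.5 threshold stand in for the LLM or trained classifier and the embedding similarity a real system would more likely use, and this is not a description of how ChatGPT actually does it.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical buckets and trigger phrases (a stand-in for a real classifier).
BUCKET_KEYWORDS = {
    "profile": ("my name is", "i live in", "i work as"),
    "preferences": ("i like", "i love", "i prefer", "i hate"),
    "projects": ("i am building", "working on"),
}


def classify(message: str) -> Optional[str]:
    """Return the bucket for a message, or None if it holds no storable fact."""
    text = message.lower()
    for bucket, phrases in BUCKET_KEYWORDS.items():
        if any(phrase in text for phrase in phrases):
            return bucket
    return None


def word_overlap(a: str, b: str) -> float:
    """Jaccard similarity on lowercase word sets (stand-in for embeddings)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


@dataclass
class FactStore:
    threshold: float = 0.5  # assumed cut-off for "similar enough to update"
    buckets: dict = field(default_factory=dict)

    def ingest(self, message: str) -> str:
        """Store a fact. Returns 'ignored', 'updated', or 'created'."""
        bucket = classify(message)
        if bucket is None:
            return "ignored"
        facts = self.buckets.setdefault(bucket, [])
        for i, existing in enumerate(facts):
            if word_overlap(existing, message) >= self.threshold:
                facts[i] = message  # similar memory exists: update it
                return "updated"
        facts.append(message)  # nothing similar: create a new memory
        return "created"
```

With this sketch, ingesting “I live in Stockholm” and later “I live in Berlin” leaves a single profile fact, because the second message is similar enough to replace the first.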

The other part, long-span memory, means storing all messages and summarizing entire conversations so they can be referred to later. This also exists in ChatGPT, but just like with pocket-sized memory, you have to enable it.

Here, if you build this on your own, you need to decide how much detail to keep, while being mindful of memory bloat and the growing database we talked about earlier.

Standard architectural solutions

There are two main architecture choices you can go for here if we look at what others are doing: vectors and knowledge graphs.

I walked through a retrieval-based approach at the start. It’s usually what people jump at when getting started. Retrieval uses a vector store (and often sparse search), which just means it supports both semantic and keyword searches.

Retrieval is simple to start with: you embed your documents and fetch based on the user question.
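As a toy illustration of that embed-and-fetch loop, here is a sketch where the “embedding” is just a bag-of-words count vector ranked by cosine similarity. That is an assumption to keep the example self-contained; a real setup would use a learned embedding model and a proper vector database. The names `embed`, `cosine`, and `VectorStore` are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        """Return the k stored texts most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(
            self.items,
            key=lambda item: cosine(query_vec, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]
```

Note that nothing in `add` ever changes or removes an earlier entry, which is exactly the property that causes trouble once facts start to change.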

But doing it this way, as we talked about earlier, means that every input is immutable. The texts will still be there even if the facts have changed.

Problems that may come up here include fetching multiple conflicting facts, which can confuse the agent. At worst, the relevant facts might be buried somewhere in the piles of retrieved texts.

The agent also won’t know when something was said or whether it was referring to the past or the future.

As we talked about previously, there are ways around this.

You can search old memories and update them, add timestamps to metadata, and periodically summarize conversations to help the LLM understand the context around fetched details.

But with vectors, you also face the problem of a growing database. Eventually, you’ll need to prune old data or compress it, which may force you to drop useful details.

If we look at Knowledge Graphs (KGs), they represent information as a network of entities (nodes) and the relationships between them (edges), rather than as unstructured text like you get with vectors.

Instead of overwriting data, KGs can assign an invalid_at date to an old fact, so you can still trace its history. They use graph traversals to fetch information, which lets you follow relationships across multiple hops.
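Here is a small sketch of both ideas, invalidating instead of overwriting and traversing across hops. Only the `invalid_at` field name comes from the text above; `valid_at`, the class names, and the simplifying assumption that each relation holds one value at a time (so a new `lives_in` fact invalidates the previous one) are mine, and this is not how any specific graph library is implemented.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Edge:
    source: str
    relation: str
    target: str
    valid_at: date
    invalid_at: Optional[date] = None  # None means the fact is still current


class TemporalGraph:
    def __init__(self):
        self.edges = []

    def add_fact(self, source: str, relation: str, target: str, when: date) -> None:
        """Add a fact and invalidate the current edge with the same source and relation."""
        for edge in self.edges:
            same_slot = edge.source == source and edge.relation == relation
            if same_slot and edge.invalid_at is None:
                edge.invalid_at = when  # old fact is kept, just marked as ended
        self.edges.append(Edge(source, relation, target, when))

    def current(self, source: str) -> list:
        """Edges leaving `source` that have not been invalidated."""
        return [e for e in self.edges if e.source == source and e.invalid_at is None]

    def history(self, source: str, relation: str) -> list:
        """Every edge ever recorded for this source and relation, oldest first."""
        return [e for e in self.edges if e.source == source and e.relation == relation]

    def neighbors(self, start: str, hops: int) -> set:
        """Nodes reachable within `hops` steps along current edges."""
        seen, frontier = {start}, {start}
        for _ in range(hops):
            frontier = {e.target for node in frontier for e in self.current(node)} - seen
            seen |= frontier
        return seen - {start}
```

If you add “user lives_in Stockholm”, then “user lives_in Berlin”, then “Berlin located_in Germany”, the current view only shows Berlin, the history still holds Stockholm with its `invalid_at` date, and a two-hop traversal from the user reaches Germany.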

Because KGs can jump between connected nodes and keep facts updated in a more structured way, they tend to be better at temporal and multi-hop reasoning.

KGs do come with their own challenges though. As they grow, infrastructure becomes more complex, and you may start to notice higher latency during deep traversals when the system has to look far to find the right information.

Whether the solution is vector- or KG-based, people usually update memories rather than just keep adding new ones, add the ability to set specific buckets like the ones we saw for the “pocket-sized” facts, and frequently use LLMs to summarize and extract information from the messages before ingesting them.

If we go back to the original goal, having both pocket-sized memories and long-span memory, you can mix RAG and KG approaches to get what you want.

Current vendor solutions (plug’n play)

I’ll go through a few different independent solutions that help you set up memory, looking at how they work, which architecture they use, and how mature their frameworks are.

Building advanced LLM applications is still very new, so most of these solutions have only been released in the last year or two. When you’re starting out, it can be helpful to look at how these frameworks are built to get a sense of what you might need.

As mentioned earlier, most of them fall into either KG-first or vector-first categories.

If we look at Zep (or Graphiti) first, a KG-based solution, it uses LLMs to extract, add, invalidate, and update nodes (entities) and edges (relationships with timestamps).

When you ask a question, it performs semantic and keyword search to find relevant nodes, then traverses to connected nodes to fetch related facts.

If a new message comes in with contradicting facts, it updates the node while keeping the old fact in place.

This differs from Mem0, a vector-based solution, which adds extracted facts on top of each other and uses a self-editing system to identify and overwrite invalid facts entirely.

Letta works in a similar way but also includes extra features like core memory, where it stores conversation summaries along with blocks (or categories) that define what should be populated.

All solutions have the ability to set categories, where we define what needs to be captured by the system. For instance, if you’re building a mindfulness app, one category could be the user’s “current mood”. These are the same pocket-sized buckets we saw earlier in ChatGPT’s system.

One thing I talked about before is how vector-first approaches have issues with temporal and multi-hop reasoning.

For example, if I say I’ll move to Berlin in two months, but previously mentioned living in Stockholm and California, will the system understand that I now live in Berlin if I ask months later?

Can it recognize patterns? With knowledge graphs, the information is already structured, making it easier for the LLM to use all available context.

With vectors, as the information grows, the noise may get too strong for the system to connect the dots.

With Letta and Mem0, although more mature in general, these two issues can still occur.

For knowledge graphs, the concern is about infrastructure complexity as they scale, and how they manage growing amounts of information.

Although I haven’t tested all of them thoroughly and there are still missing pieces (like latency numbers), I want to mention how they handle enterprise security in case you’re looking to use these internally at your company.

The only cloud option I found that is SOC 2 Type 2 certified is Zep. However, many of these can be self-hosted, in which case security depends on your own infra.

These solutions are still very new. You may end up building your own later, but I’d recommend testing them out to see how they handle edge cases.

Economics of using vendors

It’s great to be able to add features to your LLM applications, but you need to keep in mind that this also adds costs.

I always include a section on the economics of implementing a technology, and this time is no different. It’s the first thing I check when adding something in. I need to understand how it will affect the unit economics of the application down the line.

Most vendor solutions will let you get started for free. But once you go beyond a few thousand messages, the costs can add up quickly.

Remember, if you have a few hundred conversations per day in your organization, the pricing will start to add up when you send every message through these cloud solutions.

Starting with a cloud solution and then switching to self-hosting as you grow may be ideal.

You can also try a hybrid approach.

For example, implement your own classifier to decide which messages are worth storing as facts to keep costs down, while pushing everything else into your own vector store to be compressed and summarized periodically.
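One way to read that hybrid setup is as a small router in front of the vendor. The sketch below is only an assumption about how you might wire it: `is_fact`, `send_to_vendor`, and `summarize` are hypothetical callables you would supply yourself (your classifier, the vendor’s client call, and an LLM summarizer), and the plain list of summaries stands in for your own vector store.

```python
class HybridMemoryRouter:
    """Send only fact-like messages to the paid service; batch the rest locally."""

    def __init__(self, is_fact, send_to_vendor, summarize, batch_size=50):
        self.is_fact = is_fact                # your own classifier (hypothetical)
        self.send_to_vendor = send_to_vendor  # wraps the vendor call (hypothetical)
        self.summarize = summarize            # compresses a batch of messages
        self.batch_size = batch_size
        self.pending = []      # raw messages waiting to be compressed
        self.summaries = []    # stand-in for your own vector store
        self.vendor_calls = 0  # track this to estimate vendor cost

    def ingest(self, message: str) -> None:
        if self.is_fact(message):
            self.send_to_vendor(message)
            self.vendor_calls += 1
            return
        self.pending.append(message)
        # Compress periodically: once a full batch has accumulated.
        if len(self.pending) >= self.batch_size:
            self.summaries.append(self.summarize(self.pending))
            self.pending = []
```

Tracking `vendor_calls` gives you a number to multiply by the vendor’s per-message price, which is what you need to work out the unit economics.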

That said, using bite-sized facts in the context window should beat pasting in a 5,000-token history chunk. Giving the LLM relevant facts up front also helps reduce hallucinations and generally lowers LLM generation costs.

Notes

It’s important to note that even with memory systems in place, you shouldn’t expect perfection. These systems still hallucinate or miss answers at times.

It’s better to go in expecting imperfections than to chase 100% accuracy; you’ll save yourself the frustration.

No current system hits perfect accuracy, at least not yet. Research shows hallucinations are an inherent part of LLMs. Even adding memory layers doesn’t eliminate this issue completely.

I hope this exercise helped you see how to implement memory in LLM systems if you’re new to it.

There are still missing pieces, like how these systems scale, how you evaluate them, security, and how latency behaves in real-world settings.

You’ll have to test this out on your own.

If you want to follow my writing, you can connect with me on LinkedIn, or keep a lookout for my work here, on Medium, or via my own website.

I’m hoping to push out some more articles on evals and prompting this summer and would love the support.

❤️