Technology can physically change our brains as it becomes an integral part of daily life – but every time we outsource a function, we risk letting our ability atrophy away. What happens when that ability is critical thinking itself?

As a tail-end Gen-Xer, I’ve had the remarkable experience of going from handwritten rolodex entries and curly-cord rotary phones through to today’s cloudborne contact lists, which instantly let you contact people in any of a dozen ways within seconds, whatever kind of phone or device you're holding.

My generation’s ability to remember phone numbers is a bit of a coccyx – the vestigial remainder of a structure no longer required. And there are plenty of those in the age of the smartphone.

The prime example is probably navigation. Reading a map, integrating it into your spatial mental plan of an area, remembering key landmarks, highway numbers and street names as navigation points, and then thinking creatively to find ways around traffic jams and blockages is a pain in the butt, especially when your phone can do it all for you, taking traffic, speed cameras and current roadworks into account to optimize the plan on the fly.

But if you don't use it, you lose it; the brain can be like a muscle in that regard. Outsourcing your spatial abilities to Apple or Google has real consequences – studies have now shown in an “emphatic” manner that increased GPS use correlates to a steeper decline in spatial memory. And spatial memory appears to be so important to cognition that another research project was able to predict which suburbs are more likely to have a higher proportion of Alzheimer’s sufferers to nearly 84% accuracy just by ranking how navigationally “complex” the area was.

The “use it or lose it” idea becomes particularly scary in 2025 when we look at generative Large Language Model (LLM) AIs like ChatGPT, Gemini, Llama, Grok, DeepSeek, and hundreds of others that are improving and proliferating at astonishing rates.

Among a thousand other uses, these AIs more or less let you start outsourcing thinking itself. It's the concept of cognitive offloading, pushed to an absurd – but suddenly logical – extreme.

They’ve only been widely used for a couple of years at this point, during which they’ve exhibited an explosive rate of improvement, but many people already find LLMs an indispensable part of daily life. They’re the ultimate low-cost or no-cost assistant, making encyclopedic (if unreliable) knowledge available in a usable format, at speeds well beyond human thought.

AI adoption rates are off the charts; according to some estimates, humanity is jumping on the AI bandwagon considerably faster than it got on board with the internet itself.

But what are the brain effects we can expect as the global population continues to outsource more and more of its cognitive function? Does AI accelerate humanity towards Idiocracy faster than Mike Judge could’ve imagined?

A team of Microsoft researchers has made an attempt to get some early information onto the table and answer these kinds of questions. Specifically, the study attempted to assess the effect of generative AIs on critical thinking.

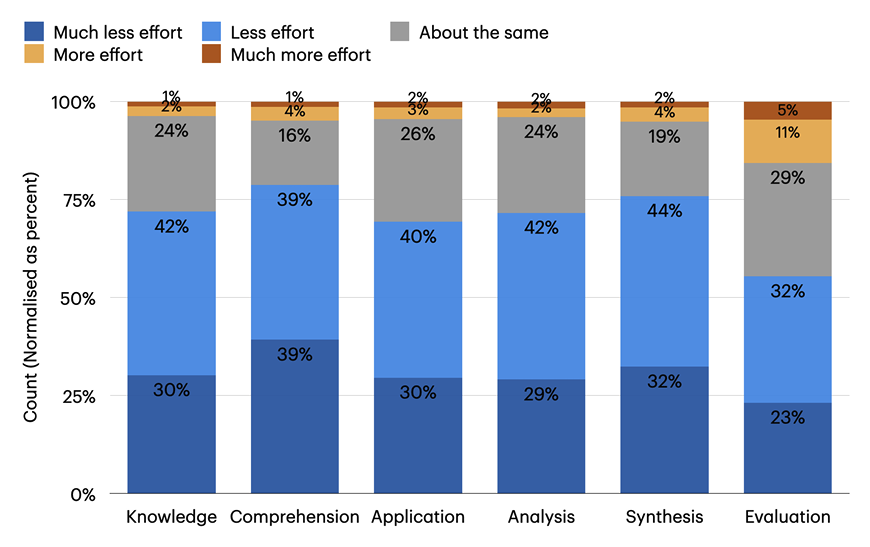

Without long-term data at hand, or objective metrics to go by, the team surveyed a group of 319 “knowledge workers,” who were asked to self-assess their mental processes over a total of 936 tasks. Participants were asked when they engaged critical thinking during these tasks, how they enacted it, whether generative AI affected the effort of critical thinking, and to what extent. They were also asked to rate their confidence in their own ability to do these tasks, and their confidence in the AI’s ability.

The results were unsurprising; the more the participants believed in the AI’s capability, the less critical thinking they reported.

Interestingly, the more the participants trusted their own expertise, the more critical thinking they reported – but the nature of the critical thinking itself changed. People weren’t solving problems themselves as much as checking the accuracy of the AI’s work, and “aligning the outputs with specific needs and quality standards.”


Does this point us toward a future as a species of supervisors over the coming decades? I doubt it; supervision itself strikes me as the kind of thing that’ll soon be easy enough to automate at scale. And that is the new problem here; cognitive offloading was supposed to get our minds off the small stuff so we can engage with the bigger stuff. But I suspect AIs won't find our “bigger stuff” much more challenging than our smaller stuff.

Humanity becomes a God of the Gaps, and those gaps are already shrinking fast.

Perhaps WALL-E got it wrong; it's not the deterioration of our bodies we need to watch out for as the age of automation dawns, but the deterioration of our brains. There's no hover chair for that – but at least there’s TikTok?

Let’s give the last word to DeepSeek R1 today – that seems appropriate, and frankly I'm not sure I’ve got any printable words to follow this. “I am what happens,” writes the Chinese AI, “when you try to carve God from the wood of your own hunger.”

Source: Microsoft Research